Generative AI and True Innovation

Composability, tools and creativity across three important innovations

Two recent papers—and one recent game development project using ChatGPT—reveal a great deal about the near-term, exponential capabilities of generative AI.

One paper showed how physical laws could be discovered with optimized neural networks. Another showed how language models can learn to interact with application programming interfaces (APIs). And the third showed how a new game was created in only a few hours using ChatGPT.

In this article I’ll review the significance of each—as well as how they relate to each other.

Discovering Physical Laws with Neural Networks

Wassim Tenachi’s Deep symbolic regression for physics guided by units constraints: toward the automated discovery of physical laws (see also the associated GitHub repository, Φ-SO: Physical Symbolic Optimization) shows how deep reinforcement learning, when properly optimized, can discover physical laws.


If you’re familiar with curve-fitting, your initial reaction may be that this is something we already do with Fourier analysis and numerical methods. Likewise, you could argue that this is also what an artificial neural network (ANN) does: you can train one to map a set of inputs to predictions that match real-world observations.

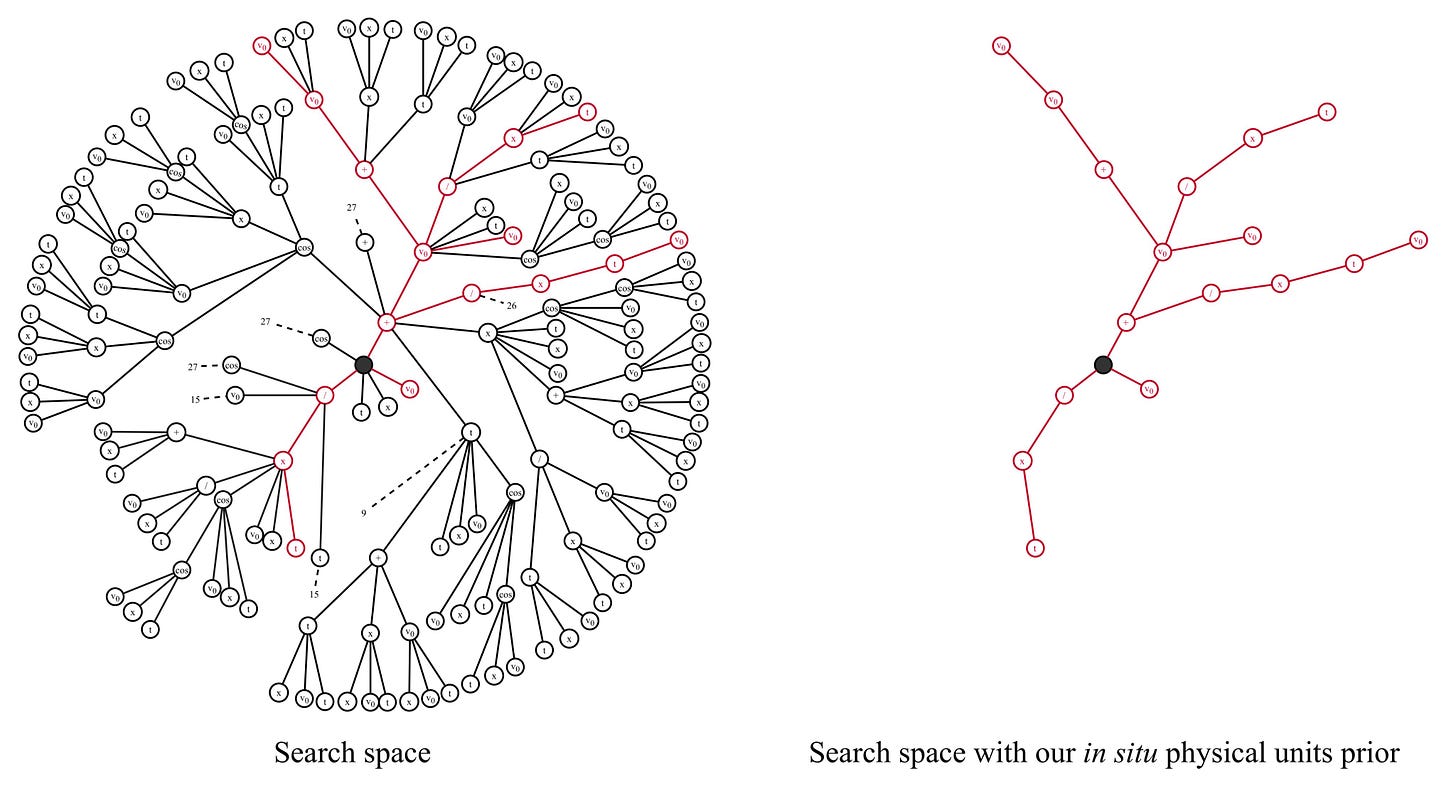

However, a goal in science is to identify the “true” underlying symbolic law, not an approximation of the curve or a black-box model, as in the case of an ANN. One approach to this is called “symbolic regression” (SR). A challenge with symbolic regression, though, is the sheer size of the search space. As noted in the paper, if you consider expressions with 30 components and 15 variables:

A naive brute-force attempt to fit the dataset might then have to consider up to 15^30 ≈ 1.9 × 10^35 trial solutions which is obviously vastly beyond our computational means to test against the data at the present day or at any time in the foreseeable future. This consideration does not even account for the optimization of free constants, which makes SR an “NP hard” (nondeterministic polynomial time) problem
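The arithmetic behind that estimate is easy to check. A quick sketch in Python; the evaluation rate below is a made-up figure used only to give a sense of scale:

```python
import math

# 15 candidate symbols at each of 30 positions gives 15**30 raw expressions
# (free constants are ignored entirely, as in the quoted estimate).
n_symbols = 15
length = 30

total = n_symbols ** length      # exact integer; Python ints do not overflow
print(f"{total:.2e}")            # → 1.92e+35
print(math.log10(total))         # roughly 35.3 orders of magnitude

# Hypothetical evaluation rate: one billion candidate expressions per second.
evals_per_second = 1e9
seconds_per_year = 365.25 * 24 * 3600
print(f"{total / evals_per_second / seconds_per_year:.1e} years")
```

Even at that optimistic rate, exhaustive search would take on the order of 10^18 years.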

The primary innovation in this paper is the realization that the search space can be drastically reduced by keeping only expressions whose physical units are consistent, which results in an exponential improvement in search efficiency.
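To make the units idea concrete, here is a toy sketch in Python. It is not Φ-SO’s implementation (which applies its constraints while a reinforcement-learning agent samples expressions). It simply enumerates every small expression over a hypothetical vocabulary (mass `m`, velocities `v` and `c`, and a dimensionless constant `1`), then counts how many survive a dimensional-consistency check and how many have the units of energy. The token set and the names `LEAVES`, `units` and `expressions` are illustrative assumptions.

```python
# Toy dimensional-analysis filter (illustrative only, not Φ-SO's code).
# Units are exponent vectors over (mass, length, time).
LEAVES = {
    "m": (1, 0, 0),    # mass: M
    "v": (0, 1, -1),   # velocity: L T^-1
    "c": (0, 1, -1),   # speed of light: L T^-1
    "1": (0, 0, 0),    # dimensionless constant
}
BINARY = ["+", "-", "*", "/"]
ENERGY = (1, 2, -2)    # M L^2 T^-2


def units(expr):
    """Return the unit vector of expr, or None if it combines incompatible units."""
    if isinstance(expr, str):
        return LEAVES[expr]
    op, left, right = expr
    a, b = units(left), units(right)
    if a is None or b is None:
        return None
    if op in ("+", "-"):
        return a if a == b else None  # only like quantities can be added
    sign = 1 if op == "*" else -1     # multiply adds exponents, divide subtracts
    return tuple(x + sign * y for x, y in zip(a, b))


def expressions(n_ops):
    """Yield every expression tree (op, left, right) with exactly n_ops operators."""
    if n_ops == 0:
        yield from LEAVES
        return
    for k in range(n_ops):  # k operators on the left, n_ops - 1 - k on the right
        for left in expressions(k):
            for right in expressions(n_ops - 1 - k):
                for op in BINARY:
                    yield (op, left, right)


n_total = n_consistent = n_energy = 0
for expr in expressions(3):
    n_total += 1
    u = units(expr)
    if u is not None:
        n_consistent += 1
        if u == ENERGY:
            n_energy += 1

print(f"{n_total} expressions, {n_consistent} unit-consistent, "
      f"{n_energy} with units of energy")
```

Filtering after enumeration is the simplest way to show the effect. A practical system would instead prune inconsistent partial expressions while building them, so the discarded branches are never generated at all.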

When this units constraint is used to guide a deep reinforcement learning search, the method was able to find a number of physical laws that had been resistant to other symbolic regression methods. Among the examples in the paper are some of the most famous equations from Special Relativity.
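For illustration (this is a standard textbook formula, not a claim about exactly which equations the paper reports), consider the relativistic energy of a mass m moving at speed v. A dimensional check shows why unit constraints are so restrictive: the quantity under the square root must be dimensionless, so v can only enter through a ratio such as v/c.

```latex
E = \frac{m c^2}{\sqrt{1 - v^2/c^2}},
\qquad
\left[\frac{v^2}{c^2}\right] = \frac{(\mathrm{L\,T^{-1}})^2}{(\mathrm{L\,T^{-1}})^2} = 1,
\qquad
[E] = \mathrm{M}\,(\mathrm{L\,T^{-1}})^2 = \mathrm{M\,L^2\,T^{-2}}.
```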

Composing with Tools

Right now, language models like ChatGPT don’t connect to external tools. That sometimes causes them to produce confusing results, such as seemingly not knowing the current date, or failing at rudimentary logic or arithmetic tasks.

This is a problem that will be solved as language models begin to directly interact with tools.

Language models are good at working with symbolic languages such as programming languages. They’ll become far more powerful as they learn to use APIs to access a wide range of software systems, as demonstrated in Toolformer: Language Models Can Teach Themselves to Use Tools (Schick et al., 2023).
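In Toolformer, the model learns to emit API calls inline in its own text, and the results are spliced back in before generation continues. The sketch below is a hypothetical, simplified executor for that idea, not the paper’s code: it assumes a bracket syntax `[Tool(argument)]` and two made-up tools, a safe arithmetic `calculator` (built on Python’s `ast` module rather than `eval`) and a `calendar` that reports today’s date.

```python
import ast
import operator
import re
from datetime import date

# Hypothetical executor for Toolformer-style inline calls such as
# "[Calculator(400 / 1400)]"; a simplified illustration, not the paper's code.

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def _evaluate(node):
    """Safely evaluate a parsed arithmetic expression (numbers, + - * /, unary minus)."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_evaluate(node.operand)
    raise ValueError("unsupported expression")


def calculator(expr):
    """Hypothetical Calculator tool: result rounded to two decimals."""
    return f"{_evaluate(ast.parse(expr, mode='eval').body):.2f}"


def calendar(_arg):
    """Hypothetical Calendar tool: ignores its argument, reports today's date."""
    return date.today().strftime("Today is %A, %B %d, %Y.")


TOOLS = {"Calculator": calculator, "Calendar": calendar}
CALL = re.compile(r"\[(\w+)\((.*?)\)\]")  # matches [ToolName(argument)]


def execute_calls(text):
    """Replace each [Tool(arg)] with [Tool(arg) → result]; leave unknown tools alone."""
    def run(match):
        name, arg = match.group(1), match.group(2)
        tool = TOOLS.get(name)
        if tool is None:
            return match.group(0)
        return f"[{name}({arg}) → {tool(arg)}]"
    return CALL.sub(run, text)


print(execute_calls(
    "Out of 1400 participants, 400 (or [Calculator(400 / 1400)] 29%) passed the test."))
```

Run on that sentence (modeled on the paper’s calculator example), the call becomes `[Calculator(400 / 1400) → 0.29]`. The model no longer has to do the arithmetic itself; it only has to decide when to ask.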

Now, the important thing to keep in mind is that “tool” will include a vast number of capabilities.

Yes, it could be simple APIs into calendars and calculators.

But it will also include sophisticated, intelligent systems, such as the methods shown in the Φ-SO project discussed earlier: systems capable not only of surfacing simple, objective facts but also of conducting searches and discovering new properties.

This is what “composability” really means: structuring knowledge and systems into a form that allows them to be easily composed into others.

Natural language is the most human compositional framework.

Generative Game Design

Daniel Tait showed how generative AI could be used to create a new game design. In a few hours, he created a game called Sumplete with the help of ChatGPT, which contributed both the design ideas and the code itself. It is a Sudoku-like number puzzle in which you delete numbers from a grid so that each row and column adds up to a target sum.
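The rules are simple enough to check mechanically. Below is a minimal brute-force solver sketch in Python, assuming the rules as summarized above; the 3×3 puzzle is made up for this example and is not taken from the actual game, and `is_solved`/`solve_sumplete` are hypothetical names.

```python
from itertools import product


def is_solved(grid, keep, row_targets, col_targets):
    """Check that the kept cells in every row and column add up to the targets."""
    n_rows, n_cols = len(grid), len(grid[0])
    rows_ok = all(
        sum(grid[r][c] for c in range(n_cols) if keep[r][c]) == row_targets[r]
        for r in range(n_rows))
    cols_ok = all(
        sum(grid[r][c] for r in range(n_rows) if keep[r][c]) == col_targets[c]
        for c in range(n_cols))
    return rows_ok and cols_ok


def solve_sumplete(grid, row_targets, col_targets):
    """Brute force: try every keep/delete pattern (2**cells of them)."""
    n_rows, n_cols = len(grid), len(grid[0])
    for bits in product([True, False], repeat=n_rows * n_cols):
        keep = [bits[r * n_cols:(r + 1) * n_cols] for r in range(n_rows)]
        if is_solved(grid, keep, row_targets, col_targets):
            return keep
    return None


# Hypothetical 3x3 puzzle, made up for this example.
GRID = [[1, 2, 3],
        [4, 5, 6],
        [7, 8, 9]]
ROWS = [4, 11, 15]   # target sum for each row
COLS = [8, 13, 9]    # target sum for each column

solution = solve_sumplete(GRID, ROWS, COLS)
for values, kept in zip(GRID, solution):
    # Show kept numbers; "." marks a deleted cell.
    print(" ".join(str(v) if k else "." for v, k in zip(values, kept)))
```

For this grid the only solution keeps 1 and 3, 5 and 6, and 7 and 8. Brute force is fine for a 3×3 toy (512 patterns) but grows as 2^(n²), so larger grids need actual deduction.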

Now, it turns out that this is not a completely new puzzle idea. But that misses the point: the vast majority of work we call “design” is not actually inventing completely new ideas. It is applying existing forms and best practices towards problems you’re working on.

In this case, Tait may not have come up with a game system we’ve never seen before, but he used generative AI to locate a design and apply it. Finding this pattern through other “search” methods would likely have required considerable specialist expertise or domain knowledge.

A huge amount of effort is spent simply reinventing ideas for which there are already good examples (consider that a medical researcher, apparently unaware of numerical integration, effectively rediscovered the trapezoidal rule from calculus and went on to collect dozens of citations!)
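The anecdote is usually told about the trapezoidal rule: to estimate the area under a curve known only at sampled points, connect the points with straight lines and sum the resulting trapezoids. A minimal Python sketch, with made-up glucose readings standing in for real data:

```python
def trapezoid_area(times, values):
    """Area under the piecewise-linear curve through (time, value) samples."""
    points = list(zip(times, values))
    # Each interval contributes width * average height (one trapezoid).
    return sum((t1 - t0) * (y0 + y1) / 2
               for (t0, y0), (t1, y1) in zip(points, points[1:]))


# Hypothetical glucose-tolerance readings: minutes vs. mg/dL.
times = [0, 30, 60, 90, 120]
glucose = [90, 150, 130, 110, 95]
print(trapezoid_area(times, glucose))  # → 14475.0
```

The function handles unevenly spaced samples too, since each trapezoid uses its own interval width.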

A massive amount of effort will be saved as generative AI uses metaphors and complex pattern matching to surface established designs for us to apply to our work.

And then we can put our brainpower against the problems that are actually novel… or send the toughest problems out to networks of intelligent tools that make fresh discoveries.

Further Reading

The spirit of this article is composability, which is the most powerful creative force in the universe.

Read about the Sumplete game, and experiment in ChatGPT with your own games, by following the guide to how Sumplete was created.

Tenachi’s paper: Deep symbolic regression for physics guided by units constraints: toward the automated discovery of physical laws

Read about how APIs could be used by language models: Toolformer: Language Models Can Teach Themselves to Use Tools

If some of the concepts in this article are new to you, I recommend reading The Generative AI Canon.
