Metaverse Interoperability, Part 1: Challenges

Many people see interoperability (the ability to unify economies, avatars and systems across worlds) as one of the defining properties of the metaverse.

My intention in this article is to open-source my thinking, starting with the recognition of the many challenges facing interoperability.

In Part 2 of this series, I’ll talk about one of the main purposes behind interoperability: composability, the ability to creatively combine interoperable components.

Problems of Interoperability

There are three fundamental problems of interoperability:

Technology: the mechanisms that allow systems to interoperate. This is the engineering problem.

Economic Alignment: providing the economic incentives so that groups and creators gain mutual benefits by participating in an interoperable ecosystem. This is a social and business problem.

Making it Fun: even with the technology and the economic incentives in place, there is no guarantee that the result is fun or that consumers will want it. This is a design problem.

Domains of Interoperability

People frequently conflate one domain of interoperability with another, and that adds confusion to how people think about the challenges and opportunities. This chart illustrates the major domains of interoperability with respect to the metaverse:

Connectivity

The connectivity level deals with the ability for various devices to connect with each other. This is largely a solved problem thanks to TCP/IP, ethernet, cell network standards, HTTP for websites, etc. But I think it’s important to remember that we already have high levels of interoperability at this level, and it is one of the reasons I describe the metaverse as the next generation of the internet — not simply one kind of product inside the internet.

Persistence

This domain deals with the problem of maintaining state in the metaverse. One of the most basic forms of persistence is the job of storing the state of the economy and how that relates to individual identity: who owns what? This accounting is a basic property in almost every online game: who has the Godslayer Sword?

There are two main ways this can be done:

A centralized database where a company is trusted to preserve this state. Online games have almost always done this on their own, using owned-and-operated databases.

A decentralized storage system such as those created by various blockchains, where nobody needs to trust any one company.

In addition to storing the state of the metaverse’s economy, the persistence layer may include other aspects, such as where objects are located and how their attributes are stored.
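To make the "who owns what?" question concrete, here is a minimal Python sketch of an ownership ledger. Everything in it is an assumption made for illustration: the `OwnershipLedger` interface, the `CentralizedLedger` class and the item and player names are hypothetical, and the in-memory dictionary merely stands in for a company-operated database. A decentralized implementation could expose the same two operations while storing state on a blockchain instead.

```python
from typing import Dict, Optional, Protocol


class OwnershipLedger(Protocol):
    """Hypothetical interface: the minimum needed to answer 'who owns what?'."""

    def owner_of(self, item_id: str) -> Optional[str]: ...

    def transfer(self, item_id: str, sender: str, recipient: str) -> None: ...


class CentralizedLedger:
    """In-memory stand-in for a database that one company owns and operates."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}

    def mint(self, item_id: str, owner: str) -> None:
        # Create a new item and assign its first owner.
        if item_id in self._owners:
            raise ValueError(f"{item_id} already exists")
        self._owners[item_id] = owner

    def owner_of(self, item_id: str) -> Optional[str]:
        # Returns None for items the ledger has never seen.
        return self._owners.get(item_id)

    def transfer(self, item_id: str, sender: str, recipient: str) -> None:
        # Only the current owner may hand the item to someone else.
        if self._owners.get(item_id) != sender:
            raise PermissionError(f"{sender} does not own {item_id}")
        self._owners[item_id] = recipient


ledger = CentralizedLedger()
ledger.mint("godslayer-sword", "alice")
ledger.transfer("godslayer-sword", "alice", "bob")
print(ledger.owner_of("godslayer-sword"))  # bob
```

Any world that codes against the interface rather than a specific backend could, in principle, swap the trusted database for a trustless one without changing how it asks about ownership.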

Presentation

What does everything look like? How does it appear to the metaverse user?

We have technology standards for the technical interchange of presentation data: this is everything from JPG and PNG files for two-dimensional graphics, to various 3D mesh files and the Universal Scene Description format invented by Pixar, to obsolete formats like VRML.

The challenges of the presentation layer aren’t really around the technologies: they’re around divergent design goals pertaining to aesthetics and accessibility. Is the presentation of the world going to be something “low fi” and voxel-based like Minecraft or The Sandbox? Photorealistic like Unreal’s metahumans? Or something in between? And what if something is 2D, like Gather.town?

Meaning

How something looks doesn’t tell you what something really means; but for a virtual world, this is crucial: meaning is how an object is interpreted.

For example, when you put on your robe and wizard hat… what does that actually mean in a particular world? In one world that might mean you go to Hogwarts. In another it might be entirely cosmetic, like a Halloween costume. In yet another it might mean something entirely different.

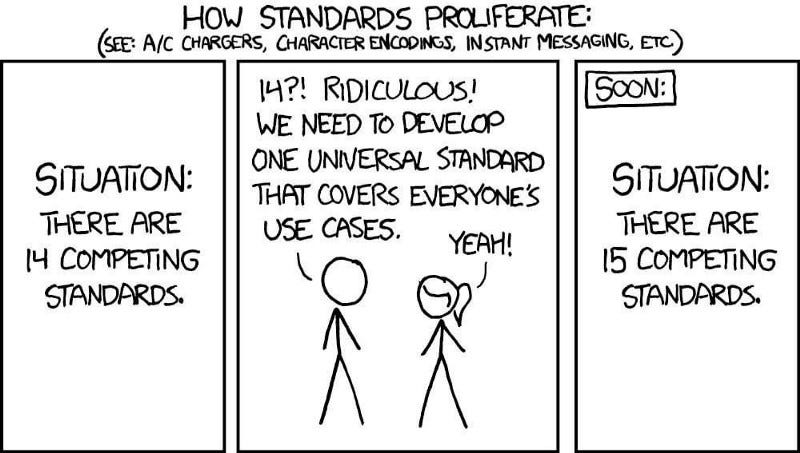

The World Wide Web has had a long history of failed attempts (the “semantic web”) to create universal standards for meaning. In principle, a semantic web would be extremely powerful: you’d be able to search and compare content according to a database of agreed-upon attributes.

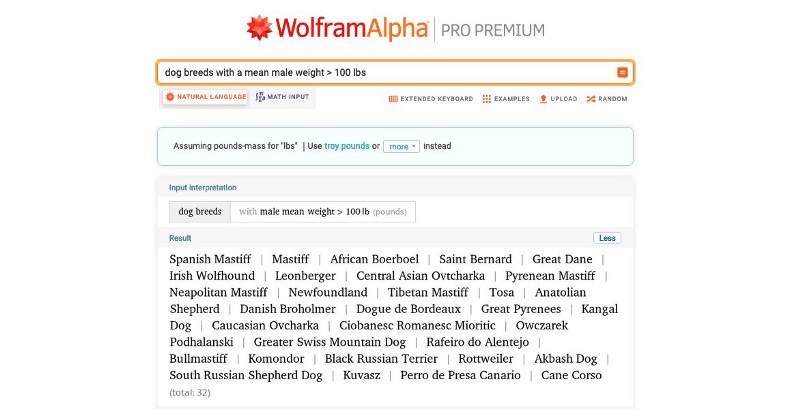

Wolfram Alpha does this on a walled-garden basis by working with domain experts to categorize and standardize sets of information:

Wolfram Alpha’s structure is reminiscent of circa-2000 Yahoo, with a hierarchy of top-down categories you can play with. The idea of a semantic web would be to allow everyone to publish web pages that add new knowledge to the internet so that we could go from hierarchically-managed data a la Yahoo to a search engine like Google.

Sounds awesome, right? So why did the semantic web fail?

Gaming the system. People already game the content on their webpages to benefit from Search Engine Optimization. Once specific categories and attributes are assigned, it actually becomes easier to game the system because people know exactly what attributes they should fudge to benefit their economic incentives.

It’s hard to get universal alignment on meanings. We can’t really agree on what “metaverse” even means (I have my version) so that’s a good microcosm to consider how hard it is in every other subdomain of meaning: everyone has their own economic incentives, their own values, their own priorities, their own perceived incompatibilities.

Newer technologies reduced the need for the semantic web. Better search and artificial intelligence are often doing a better job than human-curated meaning, and developed more rapidly than semantic standards.

Meaning gets even more complicated in the metaverse, because we’re usually talking about real-time activities in immersive spaces. I’ll return to this as I explore the myths and misunderstandings in greater depth: read on.

Behavior

Finally, the meaning of things needs to be mapped to behaviors that exist within a system. Where presentation and meaning are the “nouns” in a world, behaviors are the “verbs” that change it.

For example, in a game system the Godslayer Sword might be meant to be uber-powerful. But how do you assign this meaning to an actual behavior in a particular game system? There are different game systems, different worlds, and different ways of interpreting a Godslayer Sword. And the majority of games that exist won’t care about gods, swords, or slaying.
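One way to picture the gap between meaning and behavior is a sketch in which an item carries shared "meaning" tags and each world decides for itself what, if anything, those tags do. This is only an illustration under my own assumptions: the tag vocabulary, the `Item` and `World` classes and the three example worlds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List


@dataclass(frozen=True)
class Item:
    name: str
    tags: FrozenSet[str]  # shared "meaning" labels (hypothetical vocabulary)


# A behavior is whatever a world chooses to do with an item that has a tag.
Behavior = Callable[[Item], str]


@dataclass
class World:
    name: str
    rules: Dict[str, Behavior]  # tag -> this world's own behavior

    def interpret(self, item: Item) -> List[str]:
        # Tags the world has no rule for are silently ignored.
        return [self.rules[tag](item) for tag in sorted(item.tags) if tag in self.rules]


sword = Item("Godslayer Sword", frozenset({"weapon", "legendary"}))

fantasy_rpg = World("fantasy_rpg", {
    "weapon": lambda i: f"{i.name} can be equipped",
    "legendary": lambda i: f"{i.name} deals triple damage",
})
social_hub = World("social_hub", {
    "legendary": lambda i: f"{i.name} shows a gold glow",
})
racing_game = World("racing_game", {})  # does not care about swords at all

for world in (fantasy_rpg, social_hub, racing_game):
    print(world.name, world.interpret(sword))
```

The same sword is uber-powerful in one world, cosmetic in another and meaningless in a third, even though all three read exactly the same tags.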

Whereas the persistence domain provides the ability to preserve the state of the economy, the actual economy rises out of all of the behaviors that happen within a world or across multiple cooperating worlds.

Internal vs. External Interoperability

Internalized interoperability is the standardization of one or more of the domains (persistence, presentation, meaning, behavior) within the various experiences, sub-worlds and games that are created within a given walled-garden. Second Life was probably the first platform to do this on several domains. Roblox did this at massive scale by focusing on simplicity and accessibility (250M+ MAU).

Externalized interoperability is the standardization of certain domains across an ecosystem of cooperating products.

You can see this in cases like the Ready Player Me avatars that work in environments owned by different companies. The video below shows how these avatars can be ported into VRChat and Animaze:

The same avatar could be brought into a large number of other off-the-shelf games and environments as well.

External interoperability can happen by degrees. At one extreme, the interoperability could be “global”: for example, we already have global standards for Connectivity. At the other extreme, you could have individual worlds that don’t need to agree with others, with certain meanings and behaviors that apply only to things inside that world. In between, you could have constellations of projects that benefit by sharing certain systems: examples might include an avatar system, a market economy, or even an intellectual property that’s used as a setting.

Multi-domain vs. Targeted Interoperability

Multi-domain interoperability is the idea that “metaverse” companies ought to adopt a body of interrelated standards that unites multiple domains. The most comprehensive version of this would require that experiences in the metaverse agree upon the same connectivity, persistence, presentation, semantics, and behaviors.

Targeted interoperability is the idea that one of these domains — or even just a subdomain — ought to be the target of an effort for interoperability. For example, you could have standards for the presentation of avatars that have nothing to do with standards for persisting the ownership of property in the metaverse.
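The difference between the two approaches can be sketched by treating each domain as an independent choice. In this hypothetical Python example, the standard names ("avatar-std-x", "ledger-std-y", "in-house-db") and the two worlds are invented for illustration; the only point is that two worlds can interoperate in one domain without agreeing on any other.

```python
from typing import Dict, Set

DOMAINS = ("connectivity", "persistence", "presentation", "meaning", "behavior")

# Each world declares which standard, if any, it follows in each domain.
# All standard names below are made up for this sketch.
world_a: Dict[str, str] = {
    "connectivity": "tcp/ip",
    "presentation": "avatar-std-x",
    "persistence": "ledger-std-y",
}
world_b: Dict[str, str] = {
    "connectivity": "tcp/ip",
    "presentation": "avatar-std-x",
    "persistence": "in-house-db",
}


def shared_domains(a: Dict[str, str], b: Dict[str, str]) -> Set[str]:
    """Domains in which both worlds follow the same standard."""
    return {d for d in DOMAINS if d in a and a.get(d) == b.get(d)}


def is_multi_domain(a: Dict[str, str], b: Dict[str, str]) -> bool:
    """True only in the most comprehensive case: agreement on every domain."""
    return shared_domains(a, b) == set(DOMAINS)


print(sorted(shared_domains(world_a, world_b)))  # ['connectivity', 'presentation']
print(is_multi_domain(world_a, world_b))  # False
```

Here avatars could move between the two worlds even though ownership records cannot, which is the targeted case described above.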

Myths and Misunderstandings

I’ve observed that most of the confusion around interoperability is due to conflating the categories: for example, assuming that interoperability of persistence requires interoperability of meaning.

Another is the assumption that external interoperability must be global for it to be useful. For example: a belief that unless absolutely everyone uses the same avatar system then it isn’t useful.

These myths and misunderstandings exist across the spectrum, from metaverse idealists to metaverse cynics.

In Part 2 of this series, I dive into composability, which is the primary benefit of interoperability. And yes, I’ll be talking about specifics like NFTs, avatars, development tools and virtual world spaces, and how some of these systems are already affecting developers and consumers.

Further Reading

Composability is the Most Powerful Force in the Universe is part 2 in this series on interoperability.

The Metaverse Value-Chain explains the 7 layers of the metaverse industry; interoperability affects every one of these layers.

If you’re interested in the role of Web3 and blockchain, I’ve written about that in Web3, Interoperability and the Metaverse.

I’ve previously written about NFTs in Game Economics, Part 2: NFTs and Digital Collectibles.