Existential Threats and Artificial Intelligence Solutions

How AI is a net-positive for managing existential risks to humankind

I want to lay out why I believe the human species faces greater existential risk by not pursuing advanced artificial intelligence than by pursuing it.

In the past week an “open letter” was published asking for a 6-month halt on developing AI more advanced than GPT-4, essentially premised on the safety risks of AI. I’m not going to focus on its claims in this article[1]; instead I want to focus on the other existential risks facing the human species, and the role AI can play in mitigating them.

Someone even argued that airstrikes against GPU clusters could be justified, something that would likely increase global existential risk.[2]

Lists of existential risks to humanity frequently include nuclear war, pandemics, climate change, biotechnological terror, asteroids, supervolcanoes and various forms of cosmic doom. Let’s explore how AI can help with each of these:

Nuclear War

The most significant existential threat to humanity that is presently an actual possibility is nuclear war. But artificial intelligence could help prevent one.

First, let’s review the sobering facts of nuclear war, to put it in perspective relative to the imagined threats of artificial intelligence:

In 1945, the Hiroshima bomb killed an estimated 200,000 people, and radiation caused the deaths of tens of thousands more in the ensuing weeks. It was a weapon unlike any other in its total destruction.[3]

Since then, we’ve developed weapons that are thousands of times more powerful—and between the United States, Russia and a few other countries, we have many thousands of powerful weapons.

A “limited” nuclear exchange could easily lead to nuclear winter and extreme loss of life; a major war would be an absolute catastrophe for life on the planet. A 2022 study published in Nature Food estimated that a war using “only” 3% of current nuclear stockpiles would kill a third of the world’s population.

Artificial intelligence can help. From an intelligence perspective, language models and image detection could sift through vast amounts of open-source information about traffic, signals and social media to help make predictions about weapons…

But perhaps language models will also help us understand each other better, find common ground on currently intractable problems, and negotiate better. In 2022, the Meta AI team showed that an AI agent (Cicero) could play Diplomacy better than most human players.

![[video-to-gif output image] [video-to-gif output image]](https://substackcdn.com/image/fetch/$s_!MuMT!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fd2d6d1c8-f514-477b-9d8d-5a375d9825c5_600x314.gif)

The significance is that an AI was able to use language to negotiate with humans and obtain cooperation. Although Diplomacy is “just a game,” it is part of the continuum that began with Chess and Go. Games like Diplomacy and Poker (for which an AI has also achieved superhuman performance) are different in that they deal with asymmetric information and deception.

In time, international negotiators will be supported by AI that helps search for ideas to support de-escalation and consensus, while the humans supply the trust and empathy. Research that is nearly two decades old already showed that agents with primitive AI might be used to assist in crisis resolution, and that was long before the emergence of powerful language models. The MIT Media Lab’s Scalable Cooperation group tracks a list of research in the area of AI-assisted negotiation and cooperation.

As with many technological risks, human relationships are a primary problem when it comes to the risk of nuclear war. AI can help.

The Yuddites see it as potentially worth conducting airstrikes against GPU clusters; it is hard to imagine how this wouldn’t increase the risk of a nuclear exchange. Ironically, maybe AI could help us negotiate our way past those who might use violence to achieve an anti-AI agenda.

Pandemics

Artificial intelligence is enabled by the vast amounts of training data available on the internet today.

Consider one interesting source of data: the finding that mentions of “loss of smell” in Amazon reviews correlated with Covid-19 prevalence:

This example isn’t AI. It is just looking at the data, using fairly basic text associations from the reviews. But it speaks to the large volumes of untapped data present in social media, product reviews and online content. There must be countless “hidden layers” within this data that could be used to detect new diseases before they spread, not to mention a large number of other global threats.[4]

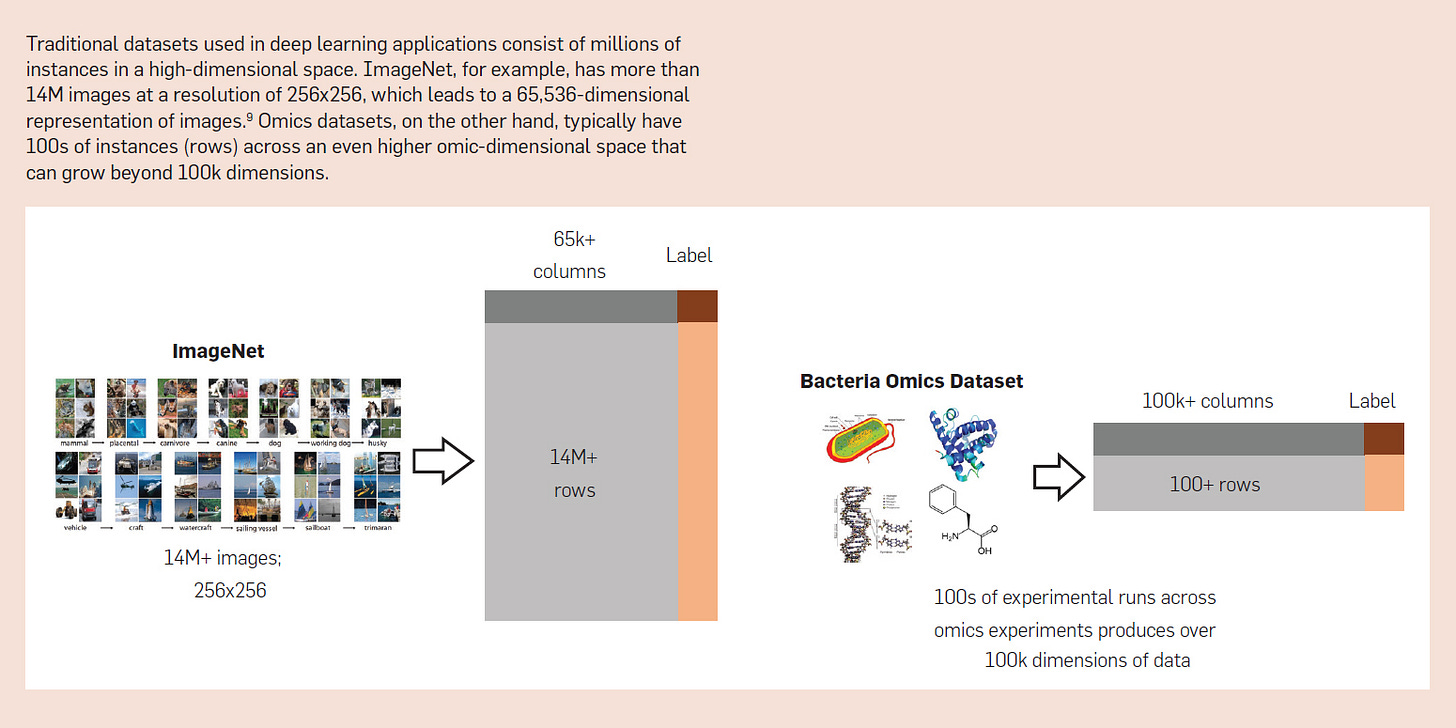

Understanding the human genome, proteome and metabolome is central to predicting the performance of vaccines and drugs. AlphaFold has predicted the 3D structures of some 200 million proteins from genetic sequence data.[5]

Similarly, AI may be used to decipher the metabolome: the 100,000+ small molecules involved in the networks of our metabolism, which are at the center of many diseases such as cancer.

AI will accelerate our ability to understand, predict, prevent and cure disease. One of the greatest improvements in human wellbeing will come from this application of AI to medicine, and it is something we must use to mitigate pandemics.

Biotechnology Risks

Just as AI has a critical role to play in curing disease and mitigating pandemics, it can also help improve the world through biotechnology. But of course, biotechnology has the ability to harm as well as help.

Here again, AI can help: by predicting the risks associated with synthetic biology. The number of data points within biological systems is staggering—a good application for deep learning, which is better than humans at deciphering the “dark matter” of knowledge. Within the vast data about genomes, organisms, clinical trials and experiments—far more than any human or any research institute can understand holistically—is a growing body of data that can improve the world as well as mitigate the risks associated with bad actors.

Bad actors will continue to work on biotechnological terrors—whether or not they have access to AI. AI has the power to help with biodefense: early detection and fast response. AI-enabled biosecurity can be implemented to reduce the risk of “lab leaks,” improve security protocols and identify threats.

And just as language systems may help improve negotiation, they can also help collaboration, networking together the vast plurality of researchers who produce knowledge with an intention to help the world.

Climate Change

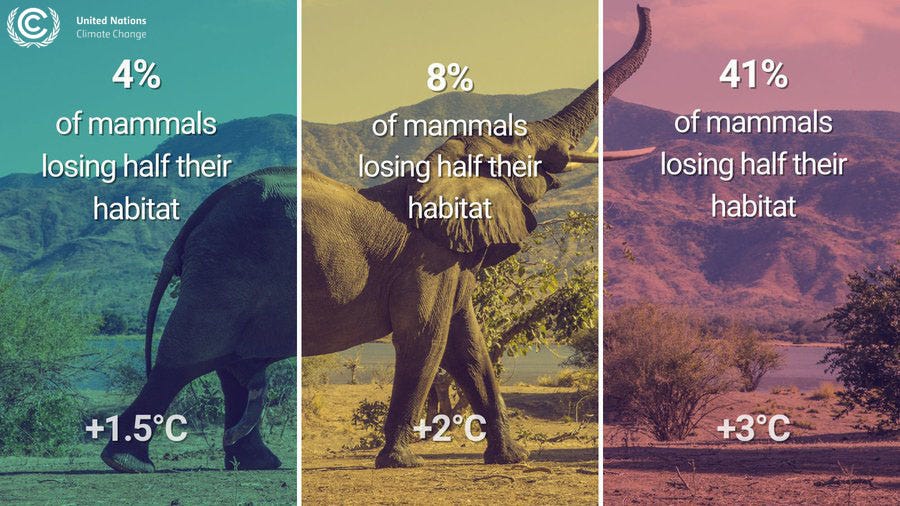

The impact of our warming climate may include a massive reduction of biodiversity (both a moral crisis and the loss of a pragmatic “insurance policy” against the risks of monoculture), droughts leading to food shortages and famine, destruction of coastal real estate, geopolitical stress caused by mass migrations, and an increased risk of armed conflicts.

How can AI help?

First, by improving our ability to model the Earth and predict the climate. This is the goal of NVIDIA’s Earth-2, which seeks to create a “digital twin” of the Earth itself.

Just as language models may help adversaries arrive at consensus (as noted above), they may help bring nuance and better negotiation to the various parties who disagree over the degree of climate change as well as the appropriate policies to implement.

But the greatest benefit of AI is likely to be in the area of science and engineering—to both prevent and mitigate the impact of climate change.

AI can help support decarbonization efforts through better allocation of resources and by reducing dependence on carbon within supply chains. As far back as 2016, DeepMind used AI to reduce the energy used for cooling Google’s data centers by 40%.

AI can help enable sustainable and precision agriculture.

AI can help optimize energy networks, improve solar energy performance, improve the efficiency of battery-powered systems, and discover new materials and designs for batteries. It could also help design better systems for carbon sequestration.

Asteroid Impacts

NASA already uses machine learning to improve the detection and prediction of dangerous asteroids. China’s National Institute of Natural Hazards is creating simulations of asteroid impacts. And if we did find an asteroid on course to hit the Earth, AI could help us figure out a method to deflect it.

Supervolcanoes

NASA scientists regard the risk of supervolcanoes as higher than that of asteroids.

Yellowstone erupts at an interval between 600,000 and 800,000 years, and if it erupted today the result would be catastrophic for the world (decreasing global temperatures) and especially North America (depositing a layer of ash over most of the continent). Yellowstone is one of about 20 known supervolcanoes on Earth.

Just as AI will help with many other forms of prediction, it may be that the data that already exists in the natural record of the earth—in geological records, in ice cores, in biological systems—could help us use AI to predict the next supervolcano.

It is ordinarily assumed that there’s nothing we could actually do about a supervolcano, but that isn’t quite true. At a minimum, you’d want an AI to help manage a wide range of NP-hard problems in resource allocation, migration, etc.

There are in fact a number of (admittedly speculative) mitigations that could be used if we knew a supervolcano was going to blow (a 2018 report identified 64 possible interventions). One of the possible interventions, in an interesting twist on the subject of climate change mitigation, could be tapping into the geothermal potential of supervolcanoes to generate power while also decreasing the temperature of the magma chambers.

But here’s the problem: there are only about 2,000 volcanologists in the world. And the proposed interventions would require vast engineering resources to understand and implement. AI could help multiply the problem-solving abilities of these volcanologists and engineers.

Yes, even with something as seemingly intractable as a supervolcano—AI is likely to be a powerful mitigation... but only if we keep investing in it.

Cosmic Doom

Sometimes, people worry about gamma-ray bursts as a potential existential risk: in only a few seconds they release more energy than our sun will in its entire lifetime. If one happened close to us, the consequences would be severe.[6]

The risk of a gamma-ray burst is probably low, but there are others: supernova explosions close enough to cause a problem (again, not too likely), or a major coronal mass ejection from the sun (these happen much more often; the Carrington Event of 1859 is one example). Even if life is not immediately harmed, the destruction of the modern electronic equipment and satellites our society depends upon would disrupt supply chains, interfere with medical care and cause widespread suffering.

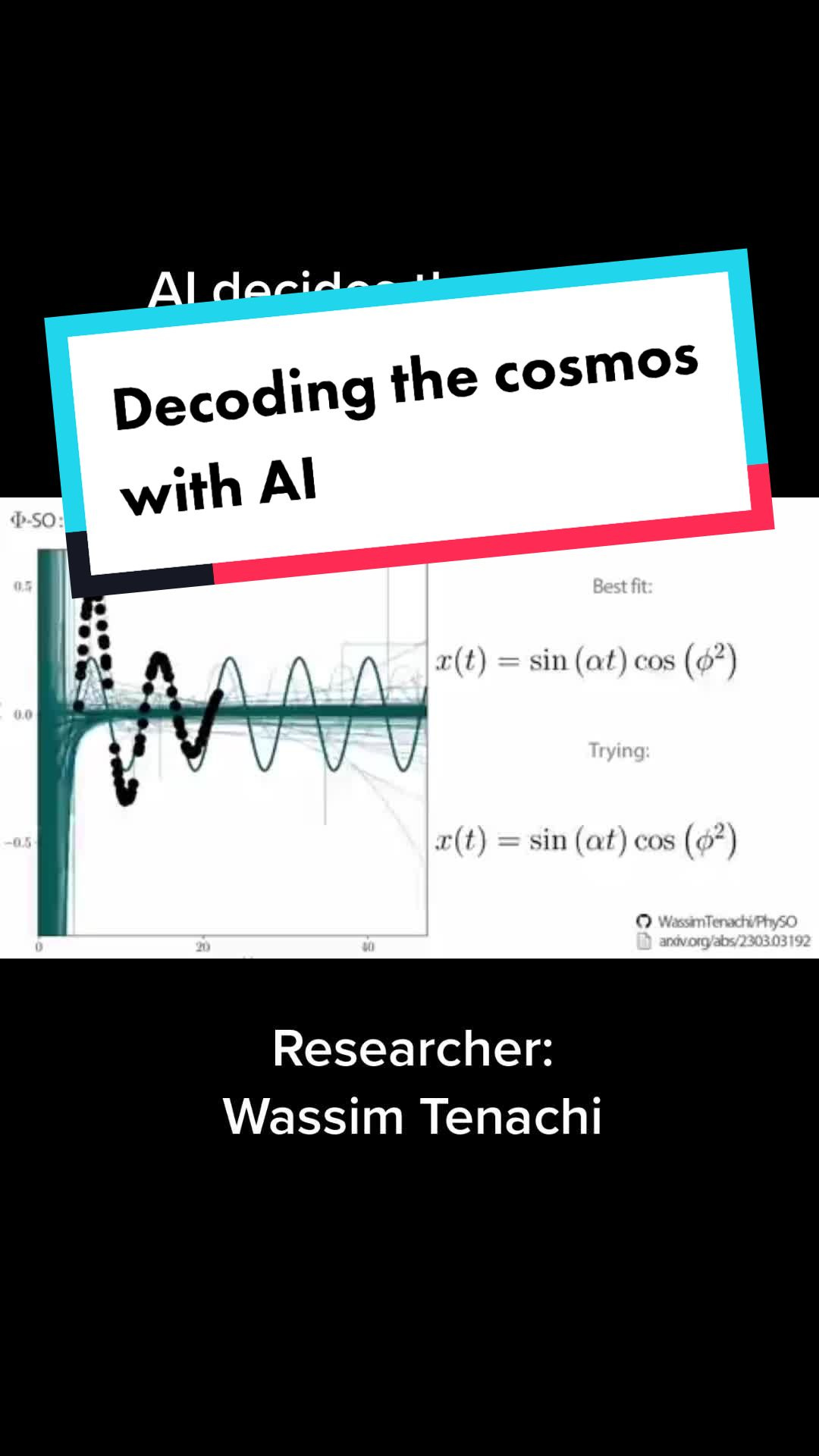

Here’s the thing: we just don’t know enough about the universe. There is an infinity of unknown unknowns, and we’ve only begun learning about the substance of reality.[7] AI will help us know more. Maybe it will even help us probe completely unknown areas, like the potential existence of (and risks associated with) extraterrestrial civilizations.

It is the most powerful tool we have to understand the cosmos.

I find that AI is essential to meeting the most important challenges facing civilization.

And perhaps AI will be critical to the most powerful mitigation of all: leaving the cradle of the Earth behind—helping us design new forms of spacecraft, materials and propulsion—enabling us to spread our species through the cosmos.

What do you think?

[1] If you want my thoughts on some of the logical flaws I see in safetyist arguments, you can read this or this. I hope to elaborate in a future article. Other comments I like by others: David Deutsch on why the proposal itself is problematic. Nobody can even define what “more advanced than GPT-4” means, or how it would apply to forms of AI that are not LLMs.

[2] I am not linking to the well-circulated article that justified airstrikes against GPU clusters, because I’m not interested in reinforcing it as a canonical article within AI safety. I think there are lots of researchers with well-intentioned and thoughtful approaches to AI safety who were taken aback by it. It doesn’t deserve more attention.

[3] This clip from Dan Carlin’s Hardcore History may give you an appreciation for the total destruction of the Hiroshima bomb, but don’t listen to it if you might be deeply disturbed by descriptions of death and destruction. This entire series is a good listen to understand the depths of misery and destruction (as well as the courage) that humans are capable of when thrust into the gears of history.

[4] Such as identifying radicalized groups, geopolitical threats, food shortages, climate-related crises, supply-chain issues, etc. See also: Identifying and explaining global events with Artificial Intelligence.

[6] Some believe that the Ordovician extinction, the second-largest extinction event on Earth, which happened about 440 million years ago, was caused by a gamma-ray burst.

[7] One of the greatest thinkers of our time, David Deutsch, elaborates on this idea in The Beginning of Infinity. He also recently commented about AI safety here, and here.
