Semantic Programming and Software 2.0

How my team built an RPG game in 1 day using new approaches to software engineering

My team[1] won the AI Tech Week 2023 Virtual Worlds Hackathon, creating a fully playable D&D-style roleplaying game called Tales of Mythos in the span of just one day.

This is part 1 of a 3-parter in which I’ll share the details, source code and architecture of what we built. In this first part, I want to share:

What we made

Why this is part of a new software development paradigm I’m calling “Semantic Programming”: a set of practices you could put in place today to massively increase productivity and to bring non-traditional programmers into the software development process.

Tales of Mythos

The game we created, starting from scratch, is an RPG loosely based on Dungeons & Dragons. You start by rolling up a character (the game quickly generates one after you select a few “Tarot” cards that determine your destiny and a nemesis character), and then you begin your adventure.

Some of the technologies we used included:

Anthropic’s Claude language model, operating with the 100K-token context window. Because of the large window, we could create a game where the story can lead you anywhere yet maintain a high level of consistency and a startling ability to recall much earlier episodes of the story.

Blockade Labs skybox generator: to create dynamic 3D scenes wherever you go—without needing the game to understand what the settings would look like in advance.

Scenario to create the 2D portrait of your character and other NPCs.

Semantic Programming

Andrej Karpathy laid out a vision for “software 2.0” that predicted a software development model in which traditionally-coded software would increasingly be replaced by components containing neural networks.

We approached this by using a language model that operated as the functional equivalent of “subroutines” that you’d normally write code for. For example, our character creation system was managed entirely by an interaction with Anthropic’s Claude LLM.

To make this work, we focused on engineering prompts to deliver the desired behavior, while also designing data tagging specifications for how we wanted the inputs and outputs to work. We chose XML for this purpose.
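To make the pairing of prompt and XML specification concrete, here is a minimal sketch in Python. It is not the project's actual prompt or schema: the prompt wording, the tag names (`<character>`, `<name>`, `<class>`, `<nemesis>`, `<backstory>`) and the helper names are all assumptions made up for illustration.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical prompt and tag names, for illustration only.
CHARACTER_PROMPT = """You are the character-creation subsystem of a fantasy RPG.
The player drew these Tarot cards: {cards}.
Reply with exactly one XML document in this form and nothing else:
<character>
  <name>...</name>
  <class>...</class>
  <nemesis>...</nemesis>
  <backstory>...</backstory>
</character>"""


@dataclass
class Character:
    name: str
    char_class: str
    nemesis: str
    backstory: str


def build_prompt(cards: list[str]) -> str:
    """Fill the prompt template with the cards the player selected."""
    return CHARACTER_PROMPT.format(cards=", ".join(cards))


def parse_character(reply: str) -> Character:
    """Turn the model's XML reply into a typed object the game can use."""
    root = ET.fromstring(reply.strip())
    if root.tag != "character":
        raise ValueError(f"expected <character>, got <{root.tag}>")

    def field(tag: str) -> str:
        # A missing tag becomes an empty string instead of None.
        return (root.findtext(tag) or "").strip()

    return Character(field("name"), field("class"),
                     field("nemesis"), field("backstory"))
```

The prompt carries the behavior, and the XML specification is the contract: the rest of the game only ever sees a `Character`, never free-form text.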

We designed a client-server architecture in which the various components, such as the Beamable backend and elements of the UI in Unity, interoperate with each other while staying consistent with how each of these systems works.

NLP-XML Subsystems

I call these components “NLP-XML subsystems” (Natural Language Processing with XML) because natural language replaces traditional code in these components, while XML[2] provides a consistent means of information exchange.

NLP-XML subsystems are excellent for:

Things that need to work with natural language (such as storytelling systems in a roleplaying game)

Things you want to rapidly prototype (perhaps with an eye to rewriting them as traditional code later—once the features and requirements solidify)
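One way to picture an NLP-XML subsystem is as an ordinary function whose body is a prompt rather than code. The sketch below assumes a hypothetical `complete` callable that sends a prompt to a language model and returns its text reply; here it is replaced by a canned `fake_model` so the example runs offline. The `<request>` and `<scene>` tags are illustrative, not the project's real format.

```python
import xml.etree.ElementTree as ET
from typing import Callable


def make_subsystem(instructions: str, root_tag: str,
                   complete: Callable[[str], str]) -> Callable[..., ET.Element]:
    """Wrap natural-language instructions as a callable 'subroutine'."""

    def run(**inputs: str) -> ET.Element:
        # Encode the inputs as XML so the model sees a consistent structure.
        request = ET.Element("request")
        for key, value in inputs.items():
            ET.SubElement(request, key).text = value
        prompt = instructions + "\n\n" + ET.tostring(request, encoding="unicode")

        # Parse the reply and check that it honors the agreed root tag.
        reply = ET.fromstring(complete(prompt).strip())
        if reply.tag != root_tag:
            raise ValueError(f"expected <{root_tag}>, got <{reply.tag}>")
        return reply

    return run


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption for this sketch)."""
    return ("<scene><narration>You enter a damp cave.</narration>"
            "<skybox>torchlit cave</skybox></scene>")


narrate = make_subsystem(
    "Continue the story. Reply with one <scene> document.", "scene", fake_model)
scene = narrate(action="enter the cave", location="mountain pass")
print(scene.findtext("narration"))
```

Because the caller only depends on the XML contract, a subsystem prototyped this way could later be rewritten as traditional code without changing anything that calls it.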

Language Model

I’ve previously created RPG and adventure-style games using ChatGPT.

The main limitation of this approach is that the game tends to lose its memory, and it is hard to define game subsystems that work well together—so it is hard to make anything that’s truly cohesive enough to be a “game.”

Claude’s large context window goes a long way toward fixing the memory problem, but the real magic happens when you prompt the LLM to encode its responses according to the NLP-XML paradigm I described above. It also lets you easily isolate a piece of functionality and test it from a chat interface, effectively making the chat system a type of IDE within the overall project.
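If you iterate on a prompt in a chat window, a small contract check makes it easy to paste a reply in and see whether it still matches what the rest of the game expects. The sketch below is one possible shape for such a check; the function name and the `<scene>`, `<narration>` and `<skybox>` tags are assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def check_reply(reply: str, root_tag: str, required: list[str]) -> list[str]:
    """Return a list of problems found in a reply; empty means it passes."""
    try:
        root = ET.fromstring(reply.strip())
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    if root.tag != root_tag:
        problems.append(f"root is <{root.tag}>, expected <{root_tag}>")
    for tag in required:
        # A tag that is absent or contains only whitespace counts as missing.
        if not (root.findtext(tag) or "").strip():
            problems.append(f"missing or empty <{tag}>")
    return problems


# Paste a reply copied from the chat interface here.
pasted = "<scene><narration>A storm gathers over the keep.</narration></scene>"
for problem in check_reply(pasted, "scene", ["narration", "skybox"]):
    print(problem)
```

Running the same check over a handful of saved replies gives a lightweight regression test for each prompt change.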

Here is a closer look at how the higher levels of software interact with the style of document responses shown above:

The above example illustrates how:

The NLP-XML subsystems can be used to power traditionally-coded software (such as the user interface we created in Unity)

They can also be used to chain between different generative AI modalities (such as Scenario for the portraits of characters you encounter, and Blockade for the creation of unique 3D skyboxes of areas you explore).
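The two points above can be sketched as a small router that reads one XML reply and hands each tagged piece to whichever system consumes it. This is an assumed design, not the project's code: the tag names are hypothetical, and the handlers are stubs standing in for the Unity UI and for requests to the skybox and portrait generators (no real Blockade or Scenario API calls are shown).

```python
import xml.etree.ElementTree as ET
from typing import Callable


def route_scene(reply: str, handlers: dict[str, Callable[[str], None]]) -> list[str]:
    """Send each child element's text to the handler registered for its tag."""
    root = ET.fromstring(reply.strip())
    dispatched = []
    for child in root:
        handler = handlers.get(child.tag)
        if handler is None:
            continue  # ignore tags that no downstream system consumes
        handler((child.text or "").strip())
        dispatched.append(child.tag)
    return dispatched


# Stub handlers that only record what they were asked to do.
ui_text: list[str] = []
skybox_prompts: list[str] = []
portrait_prompts: list[str] = []

handlers = {
    "narration": ui_text.append,          # would update the game UI
    "skybox": skybox_prompts.append,      # would request a 3D skybox
    "portrait": portrait_prompts.append,  # would request a 2D portrait
}

reply = """<scene>
  <narration>An old ferryman waves you aboard.</narration>
  <skybox>misty river at dawn, reeds, wooden dock</skybox>
  <portrait>weathered ferryman, grey beard, lantern</portrait>
</scene>"""

print(route_scene(reply, handlers))
```

The language model never needs to know how a skybox or portrait is produced; it only fills in a tag whose text becomes the prompt for the next generative system.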

The Semantic Web

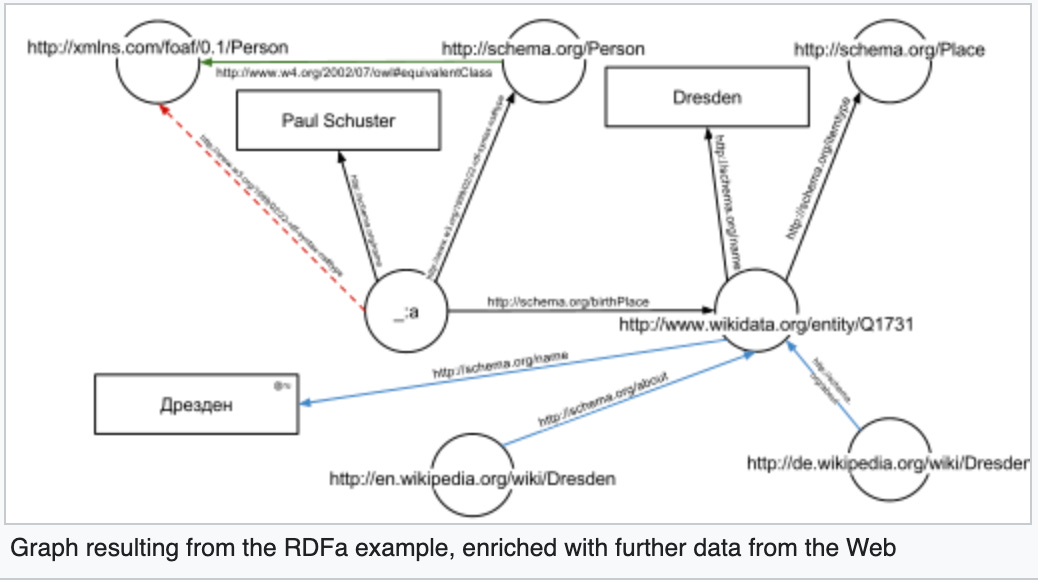

It has long been Tim Berners-Lee’s hope to create a “semantic web” where data and applications on the World Wide Web could interoperate via well-defined schemas.

Unfortunately, the semantic web never really caught on. However, artificial intelligence may supply us with the “translation layer” that could actually allow semantic applications on documents to connect with each other.

More pragmatically, this translation layer can interconnect software modules within a team, vastly accelerating rapid prototyping.

Part 2: Open Source the Prompt

In part 2, I will open-source the prompts we used in the project—and discuss some other areas we’re exploring such as methods for optimizing some of the generative elements (using vector databases and search) and multiplayer functionality.

Please subscribe to my Substack to get an update when I’ve had a chance to pull together the GitHub repository and write the overview.

[1] Our team included myself (game design, prompt engineering, XML specifications), Ali El Rhermoul (CTO of Beamable, coding rockstar), Dulce Baerga (generative art used in our character creator), and Fabrizio Milo (experiments with generative music and audio).

[2] Some may wonder why we used XML instead of something like JSON. JSON would probably be fine for many use cases, but we found that XML encoding was much more robust when it comes to using off-the-shelf parsers, which tend to deal with malformed and imperfect XML better than JSON.
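As a rough illustration of why tagged text can degrade gracefully, the sketch below recovers the complete fields from a reply that is wrapped in chatter and cut off mid-tag, while the equivalent truncated JSON is rejected outright. Note the swap: Python's standard `xml.etree` parser is strict, so this example uses a regular expression rather than an off-the-shelf lenient XML parser, and the function name and tags are assumptions.

```python
import json
import re

# Matches leaf elements only: <tag>text with no nested tags</tag>.
LEAF = re.compile(r"<(\w+)>([^<]*)</\1>")


def extract_fields(reply: str) -> dict[str, str]:
    """Collect every complete leaf element, ignoring anything malformed."""
    return {tag: text.strip() for tag, text in LEAF.findall(reply)}


truncated_xml = ("Sure! Here is the scene:\n"
                 "<scene><narration>You enter a cave.</narration>"
                 "<skybox>torchlit ca")
truncated_json = '{"narration": "You enter a cave.", "skybox": "torchlit ca'

fields = extract_fields(truncated_xml)  # keeps the finished <narration> field

try:
    json.loads(truncated_json)
    json_ok = True
except json.JSONDecodeError:
    json_ok = False  # the whole JSON document is lost
```

The trade-off is that a regular expression like this ignores nesting, so it suits flat replies better than deeply structured ones.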

Is this game available anywhere? Would love to try it

I'm just here from an old article you wrote on ChatGPT games. I do like to occasionally do a constrained text adventure like you did before, similar to Zork, but I favor open-ended RPGs with ChatGPT. Currently messing around with a colosseum game, focusing on narrative-based combat and progression. It's amazing.