GPT-4: Day Zero

One small step for a company, one giant leap for machines

I read the GPT-4 paper[1] so you don’t have to.

But you should read it (and if you feel you need more background, I have an article to help with that).

It’s hard to believe that ChatGPT was released on November 30, a mere 15 weeks ago. Roughly a quarter of a human year.[2] Nearly two dog years.

That’s the pace this technology is improving at.

Let’s discuss a few of the immediate takeaways.

Superior Code Generation

GPT-4 is getting much better at generating code. In the near future, many programming tasks will be handled entirely through text-based interfaces. Here’s an example of Pong being created in less than 60 seconds:

![video-to-gif output image](https://substackcdn.com/image/fetch/$s_!A7Up!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0e414ad3-ed16-4803-b959-42c63d698798_600x418.gif)

Granted, Pong is a relatively simple game. But the trajectory we’re on is clear. Last year, I said that games at the sophistication level of Elden Ring would be made by an individual creator with an AI assistant within a decade[3] (a lot of people told me I was crazy). Now, it feels like I overestimated the timeline by an embarrassing amount.[4]

Superior Standardized Test Performance

GPT-4 significantly outperforms GPT-3.5 and is comparable to top-performing humans on a wide range of exams. For example, on a simulated bar exam it has gone from scoring around the bottom 10% of test takers to scoring around the top 10%.

Programmers: it looks like this version of GPT isn’t quite ready to take your jobs yet. It performs fairly poorly on the Leetcode tests. I wonder how that will change by GPT-5 or GPT-6, though.

Automating Creativity

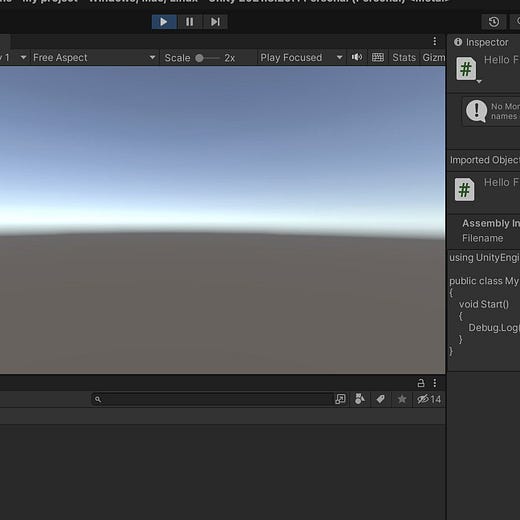

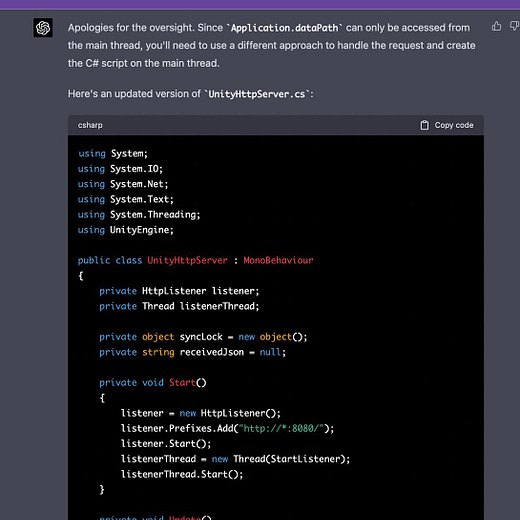

But Leetcode performance may not be what matters most right now. What matters may simply be that GPT-4 puts the power of programmatic creation into the hands of people who would otherwise need to hire teams or learn many skills they don’t yet have. Tejas Kulkarni was able to implement a plugin for Unity in a couple of hours, despite being new to both Unity and C# programming.

Integrating the Modes of Experience

GPT-4 supports reasoning from imagery.

We don’t think simply in language or text; we think with all of our senses. We integrate all of our inputs; we simulate—we predict. Vision is far older than language, having evolved with the eye, long epochs before the tongue existed.

GPT-4 evokes an older stage in the evolution of intelligence, and then recombines it with the power of language.

For now, it is “only” 2-dimensional images.

Soon, it will perform predictions in the realm of 3-dimensional spatial computing.

And then the 4th dimension of time: where video and immersive real-time virtual worlds and augmented reality will unlock another set of information at the heart of existence.

And then it will explore all the other dimensions of experience beyond vision, and the dimensions of possibility beyond that: dimensions not of reality as we know it, but of reality as it might be, perhaps eventually capturing the totality of Borges’s Garden of Forking Paths.

With slow precision, he read two versions of the same epic chapter. In the first, an army marches into battle over a desolate mountain pass. The bleak and somber aspect of the rocky landscape made the soldiers feel that life itself was of little value, and so they won the battle easily. In the second, the same army passes through a palace where a banquet is in progress. The splendor of the feast remained a memory throughout the glorious battle, and so victory followed.

— Jorge Luis Borges

The Next Frontier: Cosmic Comprehension

Let’s talk about something that isn’t part of GPT-4… yet.

As humans, we think our languages are pretty cool. And I’ll agree that getting machines to understand our messy, syntactically inconsistent, metaphor-laden languages has been a hard problem.

But understanding human language isn’t that cool.

You know what’s cool? Understanding the language of the cosmos.

![video-to-gif output image](https://substackcdn.com/image/fetch/$s_!t_sN!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F2124218c-e6f9-42e7-a6fd-3db34c3837f5_480x270.gif)

The above is the sort of output you can surface using the symbolic regression technology invented by Wassim Tenachi. I discuss it in Generative AI and True Innovation. In short, it figures out physical laws from data, not as a black box like most neural networks, but as a set of explicit, human-readable rules (equations) that describe our universe.
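To make the idea concrete, here is a toy sketch in Python. It is not Tenachi’s method; real symbolic-regression tools search a vastly larger space of expressions. This sketch assumes a tiny, hand-picked list of candidate laws of the form y = c · f(x), fits the constant c for each by least squares, and keeps whichever formula fits best. The names `CANDIDATES`, `fit_constant`, and `symbolic_regression` are hypothetical, and the data is synthetic: it is generated from Kepler’s third law (T = a^1.5, with a in AU and T in years), so the right answer is known in advance.

```python
import math

# Hypothetical, hand-picked search space: each candidate law has the form y = c * f(x).
# Real symbolic-regression tools build and search far larger expression trees.
CANDIDATES = {
    "c * x": lambda x: x,
    "c * x**2": lambda x: x ** 2,
    "c * x**3": lambda x: x ** 3,
    "c * sqrt(x)": lambda x: math.sqrt(x),
    "c * x**1.5": lambda x: x ** 1.5,
    "c * log(x)": lambda x: math.log(x),
    "c * exp(x)": lambda x: math.exp(x),
}


def fit_constant(fx, ys):
    """Least-squares c for y ≈ c * f(x), i.e. c = sum(f*y) / sum(f*f)."""
    denom = sum(f * f for f in fx)
    if denom == 0:
        return 0.0
    return sum(f * y for f, y in zip(fx, ys)) / denom


def symbolic_regression(xs, ys):
    """Try every candidate law; return (name, c, mse) for the best fit."""
    best = None
    for name, f in CANDIDATES.items():
        fx = [f(x) for x in xs]
        c = fit_constant(fx, ys)
        # Mean squared error of the fitted formula against the data.
        mse = sum((c * v - y) ** 2 for v, y in zip(fx, ys)) / len(xs)
        if best is None or mse < best[2]:
            best = (name, c, mse)
    return best


if __name__ == "__main__":
    # Synthetic data from Kepler's third law, T = a**1.5
    # (a: semi-major axis in AU, roughly Mercury through Saturn; T: period in years).
    a = [0.387, 0.723, 1.0, 1.524, 5.203, 9.58]
    T = [x ** 1.5 for x in a]
    name, c, mse = symbolic_regression(a, T)
    print(f"best fit: y = {name} with c = {c:.3f} (mse = {mse:.2e})")
```

Run on this data, the search should pick `c * x**1.5` with c close to 1, recovering the law from the numbers alone. The point of the design is the one made above: the result is a readable formula, not an opaque table of weights.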

Symbolic regression is powerful, but it currently works as a standalone tool. Soon, the internet-of-AI will weave together this and tools like it, fusing them with the framework of natural language, and with the language of all senses and all things.

The cosmos awaits.

Or is it the meta-cosmos?

Further reading

Composability is the most powerful creative force in the universe.

Generative AI and True Innovation

I also created a follow-up to capture your own GPT-4 observations: GPT-4: The Morning After - Open Thread.

[1] After posting this, a number of people commented that it isn’t a “paper.” OK, sure: it is just a “technical report.” I find these definitional arguments a bit distracting from the substance of GPT-4’s impact. But I’ll certainly agree that I’d prefer more advanced AI work to be built in public. Decentralized AI will be a good future topic for me to discuss.

[2] Of course, GPT-4 is the result of many years of work: it didn’t spring from Zeus’s brow. The point here is the absolute rate of change (the derivative of marked progress), not a measure of the total time invested.

![video-to-gif output image](https://substackcdn.com/image/fetch/$s_!oVLS!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb8d87d36-55ae-47dd-b391-ba44f9124c07_600x337.gif)

[3] I made that same within-a-decade prediction in a conference talk early last year.

[4] I posed the question during the Q&A of a panel at SXSW earlier this week: three of the four panelists said within five years; one said within TWO years.
