Web Renaissance, Part 2: How the Web Could Eat Software

WebGPU, WebAssembly, and the possibilities for Games

This is the second in a three-part series about the imminent disruption of the Web—as well as the broader Internet. This post is heavily informed by my experience in games. If you're new here, thank you for reading! My articles cover games, gametech, virtual worlds and the intersections with artificial intelligence, spatial computing and the metaverse.

Web browsers have sought to become the general portal through which all software is accessed—yet this has never come to be.

The key constraints are poor performance and poor packaging. This article will discuss new technologies that seek to change this:

WebGPU

WebAssembly

Progressive Web Applications (PWAs)

Games are a good example of how the Web has lagged far behind other platforms in delivering high-performance software. And the technologies that games use are precursors to the myriad spatial computing, scientific visualization, simulation and metaverse products that are on the way.

tldr: technical improvements in WebGPU, WebAssembly, and PWAs allow for software that’s much more performant and application-like inside your web browser. This is especially relevant to games and spatial applications. If you want to understand why this is the case, read on; if you want to focus more on the business implications of all these changes, move on to Part 3 (coming soon).

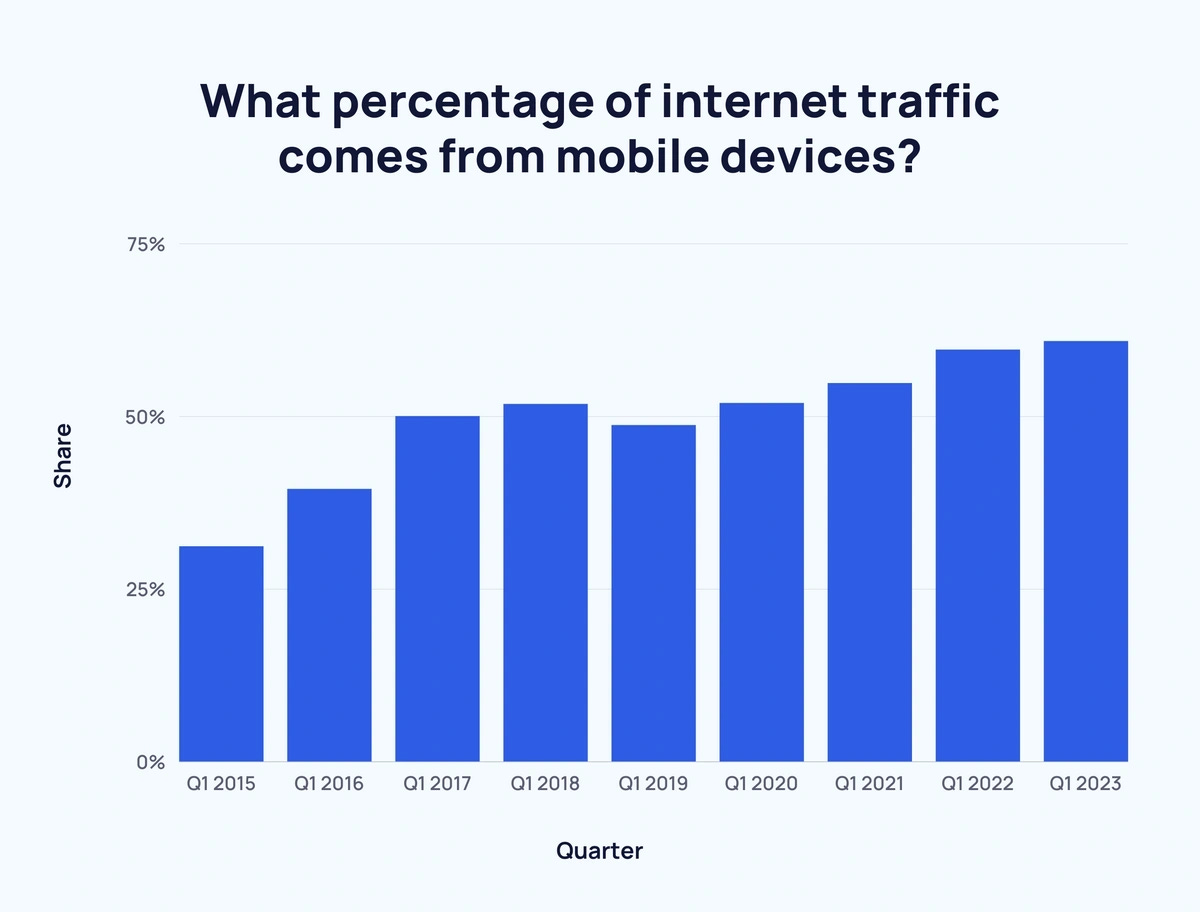

Mobile Software Distribution

Mobile devices account for nearly 60% of website traffic. So when we’re talking about software delivery over the Web, we’re mostly talking about mobile devices.

Furthermore, it is mobile users and mobile developers who will benefit most from web-based software delivery—for the simple reason that the mobile app stores control software distribution, impeding innovation through their fees and restrictions.

WebGPU

One of the most important catalysts of progress is WebGPU, which is a new graphics API supported by all the major Web browsers.

WebGPU dramatically improves the performance of graphics-intensive software on the Web. This is because it allows Web software to access graphics hardware more directly.
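In practice, “more direct access” starts with asking the browser for a GPU adapter and then a logical device, through which all buffers, shaders and commands are created. The sketch below is a minimal, hedged illustration: `navigator.gpu`, `requestAdapter()` and `requestDevice()` are part of the WebGPU API, while `acquireGpuDevice` is a hypothetical helper name. It is typed loosely (via `any`) so it compiles without the `@webgpu/types` package.

```typescript
// Minimal sketch: feature-detect WebGPU and acquire a device.
// Typed loosely (via `any`) so it compiles without the @webgpu/types package.
async function acquireGpuDevice(): Promise<unknown> {
  const nav = (globalThis as any).navigator;
  // `navigator.gpu` exists only where the browser (or runtime) implements WebGPU.
  if (!nav || !nav.gpu) {
    return null;
  }
  // An adapter represents a GPU implementation; requestAdapter() resolves to null if none is usable.
  const adapter = await nav.gpu.requestAdapter({ powerPreference: "high-performance" });
  if (!adapter) {
    return null;
  }
  // The device is the logical handle through which buffers, shaders and commands are created.
  return adapter.requestDevice();
}

acquireGpuDevice().then((device) => {
  console.log(device ? "WebGPU device ready" : "WebGPU unavailable; fall back to WebGL");
});
```

Returning `null` instead of throwing lets an engine fall back to WebGL on browsers that have not shipped WebGPU yet.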

Brief History of Graphics APIs

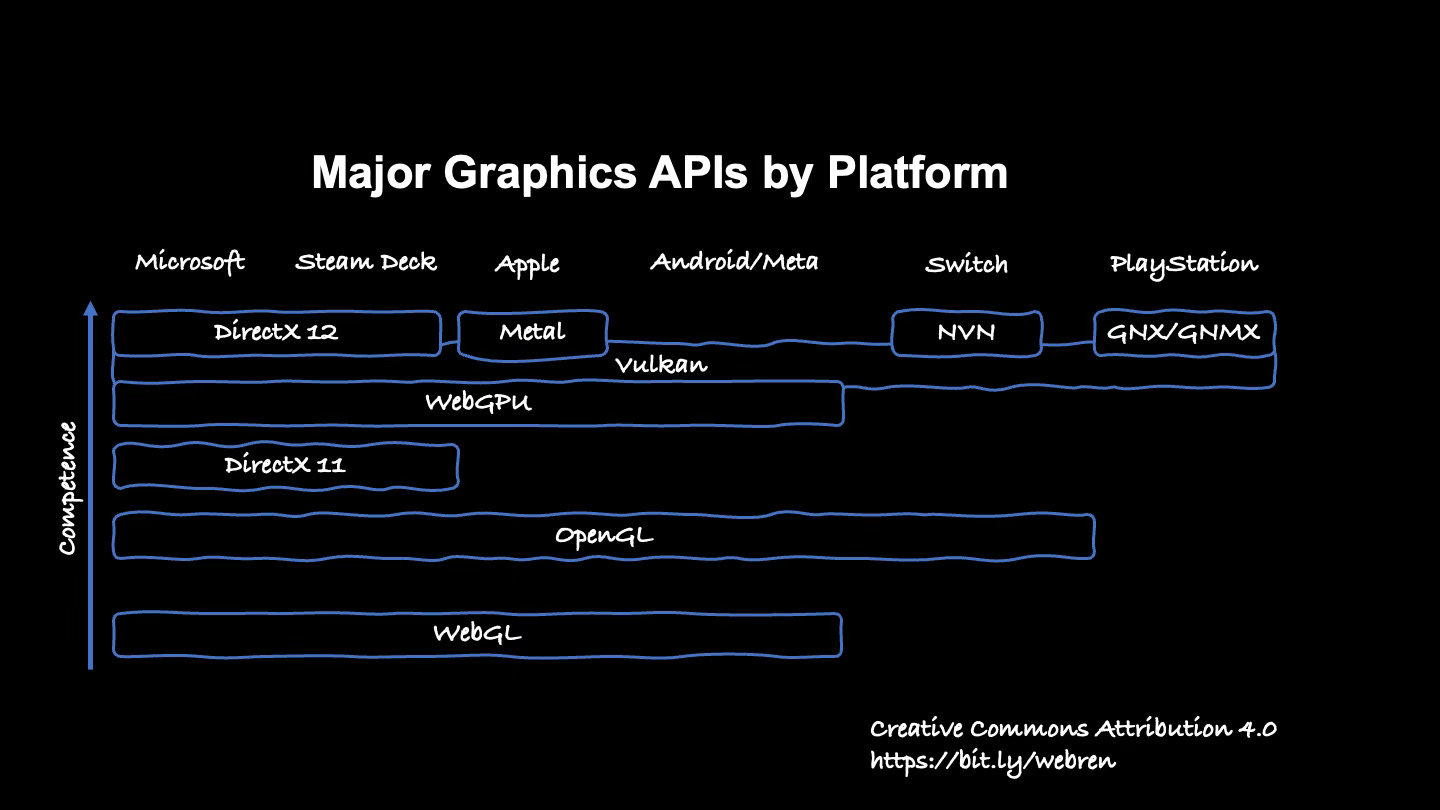

To understand how WebGPU improves on what came before, it’s instructive to take a quick tour of the graphics APIs that preceded it:

If you go way back to the 1980s, there were graphics APIs like PHIGS and IRIS GL, the latter created by Silicon Graphics, an early leader in graphics-intensive computer workstations. By 1992, IRIS GL was overhauled and replaced by OpenGL, an open standard for graphics across different computing architectures.

It is important to understand that at this time, GPUs did not exist. Computers used graphics coprocessors with a fixed set of functions, which meant you were limited to whatever graphics functions were built in. If you wanted to do anything more dynamic, you’d rely on the CPU for programmability and have to shuttle data back and forth in a highly inefficient manner.

When NVIDIA marketed the GeForce 256 as the “world’s first GPU” in 1999, its headline feature was hardware transform and lighting; programmable shaders[1] (custom code that runs in parallel on the chip) followed with the GeForce 3 in 2001. The ability to execute your own graphics and parallel-processing code rapidly accelerated graphics performance. It was also a new architectural paradigm, one that required a lot of contortions within OpenGL, which had been designed in the era of fixed-function coprocessors and rapidly began to show its age.

With the emergence of programmable shaders, a number of other graphics APIs were invented that optimized performance on new hardware. By organizing themselves around the architectural changes that followed the advent of GPUs, and by offering lower-level access to hardware, the new APIs were significantly more performant than OpenGL. DirectX 11 was released by Microsoft in 2009; it continues to be a popular API for building games, because it is a high-level API that’s relatively easy for programmers to work with (and is widely supported by older hardware). However, Microsoft has since released a more performant version, DX 12, which provides more direct access to hardware.[2] Similarly, Apple created the Metal API for its ecosystem, optimized for Mac, iOS and visionOS devices.

Vulkan was released in 2016 as a successor to OpenGL to address its shortcomings: it is a much lower-overhead API that provides more direct access to hardware. It is cross-platform (meaning you can write once and run across most hardware platforms), yet its performance is often comparable to the hardware-specific APIs. Vulkan is how graphics-intensive Android devices (including products like the Meta Quest series) deliver high performance, and on Windows it often performs comparably to DirectX; on Apple devices it runs through a translation layer (MoltenVK) on top of Metal.[3]

The Web has been stuck with WebGL for many years. It is an old API based on OpenGL ES, a restricted subset of OpenGL, and it inherited OpenGL’s dated architecture and many of its performance issues. It’s also more limited than OpenGL: although it uses a version of OpenGL’s shading language (GLSL ES), what shaders can do is severely restricted for a range of security and cross-platform reasons. In particular, you can’t create compute shaders (needed for ML/AI applications) in WebGL.

Nanite and Lumen

For a specific example of the sort of advanced graphics you can achieve with modern graphics APIs, let’s consider the Nanite and Lumen technologies included in Unreal Engine 5.

Nanite lets developers create objects with virtually unlimited geometric detail, and it automatically renders them at an appropriate level of detail however they’re placed in a scene (saving developers from painstaking optimization and polygon-reduction tasks).

Lumen is a global illumination system that can light scenes properly in real time. For end-users, this means a much more dynamic experience: scenes that change as light sources move around them. But it isn’t just for end-users: Lumen saves developers from “baking” the lighting of their environments (a time-consuming task you need to do in advance, interrupting the flow of development).

Nanite and Lumen work on DirectX 12 and Vulkan, but not the older mid-level APIs; this is a good illustration of the innovations that are possible when developers gain more-direct access to GPU hardware. Lumen wasn’t feasible on OpenGL or older versions of DirectX—let alone WebGL.

From WebGL to WebGPU

WebGL never quite sparked an explosion of games on the Web, due to the significant performance differences, although it did promote a lot of Web-based scientific visualization and especially geospatial applications.[4]

WebGPU vastly improves upon WebGL. It isn’t just an incremental improvement; it is informed by modern hardware and APIs like Vulkan rather than by the aging OpenGL that WebGL was based on.

WebGPU is a cross-platform graphics API, built for modern graphics hardware architectures. It is low-overhead and highly performant. It also includes security features that make it usable in web software: limiting data access to the web page it is running on, and validating commands before they’re sent to the GPU.
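To make the validation point concrete, here is a minimal sketch, assuming `device` is a `GPUDevice`. It wraps `createBuffer()` in a validation error scope (`pushErrorScope` and `popErrorScope` are part of the WebGPU API) and can be used to request a buffer that combines `MAP_READ` with `STORAGE`, which the spec disallows: a buffer mappable for reading may only also be a copy destination. The browser reports the error instead of passing the bad request to the driver. `tryCreateBuffer` is a hypothetical helper, and the flag values are copied from the spec’s `GPUBufferUsage` constants so the sketch also loads outside a browser.

```typescript
// Flag values from the WebGPU spec; in a page you would write GPUBufferUsage.MAP_READ etc.
const MAP_READ = 0x0001;
const COPY_DST = 0x0008;
const STORAGE = 0x0080;

// Returns the validation error message, or null if the buffer descriptor was valid.
// `device` is assumed to be a GPUDevice (typed `any` to avoid needing @webgpu/types).
async function tryCreateBuffer(device: any, size: number, usage: number): Promise<string | null> {
  device.pushErrorScope("validation");
  // An invalid descriptor yields an unusable buffer plus a validation error;
  // the bad request never reaches the GPU driver.
  device.createBuffer({ size, usage });
  const error = await device.popErrorScope(); // resolves to null when nothing went wrong
  return error ? error.message : null;
}

// In a browser:
// const msg = await tryCreateBuffer(device, 256, MAP_READ | STORAGE);  // expect an error message
// const ok = await tryCreateBuffer(device, 256, MAP_READ | COPY_DST);   // expect null
```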

The result is that WebGPU performance in graphics applications approaches that of modern native APIs like Vulkan. In one demo, switching from WebGL to WebGPU raised performance from 8 fps to 60 fps.
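Frame rates are easier to compare as per-frame time budgets. The arithmetic below simply converts the demo’s two figures; it makes no claim about how other workloads would fare.

```latex
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{fps}}
\quad\Rightarrow\quad
t_{8} = \frac{1000}{8} = 125\ \text{ms},\qquad
t_{60} = \frac{1000}{60} \approx 16.7\ \text{ms},\qquad
\frac{t_{8}}{t_{60}} = \frac{60}{8} = 7.5
```

In other words, each frame went from taking 125 ms to fitting within the roughly 16.7 ms budget that 60 fps requires.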

WebGPU brings programmers closer to the hardware, while not compromising on security or cross-platform support. And unlike WebGL, whose shaders are limited to vertex and fragment stages, WebGPU also supports compute shaders, all written in its own WebGPU Shading Language (WGSL). This unlocks a huge amount of advanced capabilities for 3D engines, custom graphics-intensive applications, and computation; one could compare it to the leap in games from the fixed-function hardware that preceded programmable shaders to the GPUs of today. And just as Lumen brought real-time global illumination to Unreal Engine 5 once graphics APIs allowed more direct hardware access, WebGPU ought to unlock a whole new domain of high-performance graphics systems on the Web.

3D Engine Support for WebGPU

3D engines have several important jobs: they provide an abstraction layer for the various graphics APIs described earlier; they provide a workflow that helps you do most of the work involved in composing a graphics-intensive application without much coding; and they provide interoperable ecosystems of asset stores (plug-in code and graphics objects) that let you assemble products more rapidly.
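The abstraction-layer job can be sketched in a few lines: game code talks to one backend interface, and the engine picks the best graphics API the browser offers. This is a hypothetical illustration; `RenderBackend`, `detectCapabilities` and `pickBackend` are not names from Unity, Unreal, Babylon.js or Three.js. Note that a present `navigator.gpu` does not guarantee that `requestAdapter()` will succeed, so a real engine would confirm that before committing to WebGPU.

```typescript
type BackendName = "webgpu" | "webgl2" | "webgl";

interface RenderBackend {
  name: BackendName;
  supportsCompute: boolean; // WebGL has no compute shaders; WebGPU does
}

interface Capabilities {
  webgpu: boolean;
  webgl2: boolean;
  webgl: boolean;
}

// Probe what the current environment offers (all false outside a browser).
function detectCapabilities(): Capabilities {
  const g = globalThis as any;
  // Use a fresh canvas per probe: once a canvas has one context type,
  // getContext() returns null for any other type.
  const probe = (type: string): boolean =>
    Boolean(g.document && g.document.createElement("canvas").getContext(type));
  return {
    webgpu: Boolean(g.navigator && g.navigator.gpu),
    webgl2: probe("webgl2"),
    webgl: probe("webgl"),
  };
}

// Prefer the most capable API and fall back in order.
function pickBackend(caps: Capabilities): RenderBackend | null {
  if (caps.webgpu) return { name: "webgpu", supportsCompute: true };
  if (caps.webgl2) return { name: "webgl2", supportsCompute: false };
  if (caps.webgl) return { name: "webgl", supportsCompute: false };
  return null;
}

console.log(pickBackend(detectCapabilities())?.name ?? "no GPU rendering available");
```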

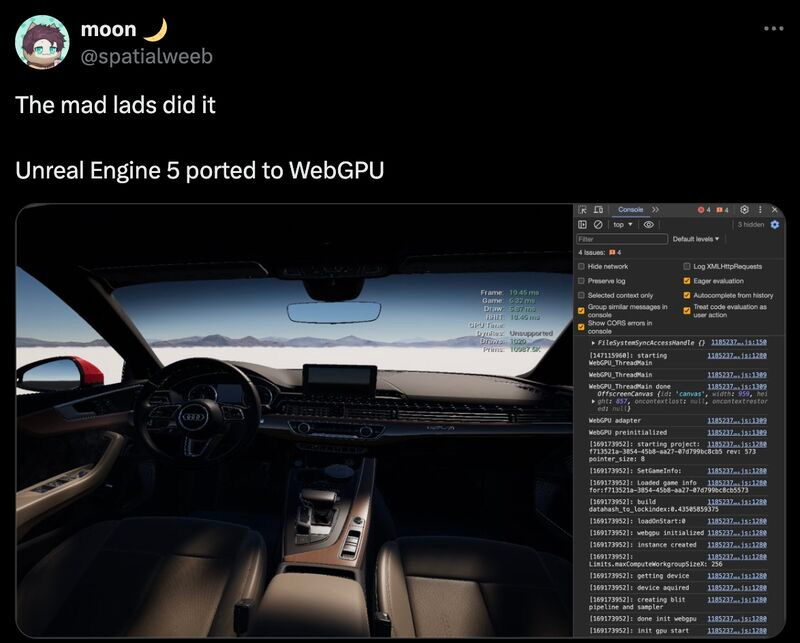

Unity has announced support for WebGPU, and there’s already a build of Unreal 5 that works with it (albeit without support for Nanite and Lumen in its current version). Web-native 3D engines like Babylon.js have also announced support, and there’s a WebGPU backend for the popular Three.js graphics library used by many Web developers.

Wonder Interactive, a startup building for the immersive Web, has gotten Unreal Engine 5 running on WebGPU.

Compute Shaders

As noted earlier, shader programming is not just about rendering. Compute shaders also allow for parallel processing applications, where software runs on the GPU hardware but isn’t necessarily about graphics. This is how GPUs came to be used for machine learning and artificial intelligence.

Although later versions of OpenGL (starting with 4.3) added support for compute shaders, they were always an awkward and less efficient programming structure, and they never made it into WebGL. DirectX, Vulkan and Metal all support compute shaders, and so does WebGPU, which was influenced by Vulkan.
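Here is roughly what a compute shader looks like in WebGPU: a WGSL kernel that doubles every element of an array, plus the calls that upload the data, dispatch enough workgroups to cover it, and read the result back. It is a minimal sketch, assuming `device` comes from `adapter.requestDevice()`; `doubleOnGpu` and `workgroupCount` are hypothetical helper names, and `GPUBufferUsage` and `GPUMapMode` (browser globals) are read through `globalThis` so the file also loads outside a browser. The same pattern, at much larger scale, is what in-browser ML inference builds on.

```typescript
const WORKGROUP_SIZE = 64;

// WGSL compute shader: each invocation doubles one element of a storage buffer.
const doubleShader = /* wgsl */ `
@group(0) @binding(0) var<storage, read_write> data: array<f32>;

@compute @workgroup_size(${WORKGROUP_SIZE})
fn main(@builtin(global_invocation_id) id: vec3<u32>) {
  let i = id.x;
  // The last workgroup may extend past the end of the array.
  if (i < arrayLength(&data)) {
    data[i] = data[i] * 2.0;
  }
}`;

// Number of workgroups needed so that every element gets an invocation.
function workgroupCount(n: number, size: number = WORKGROUP_SIZE): number {
  return Math.ceil(n / size);
}

async function doubleOnGpu(device: any, input: Float32Array): Promise<Float32Array> {
  const g = globalThis as any; // GPUBufferUsage / GPUMapMode are browser globals
  const storage = device.createBuffer({
    size: input.byteLength,
    usage: g.GPUBufferUsage.STORAGE | g.GPUBufferUsage.COPY_SRC | g.GPUBufferUsage.COPY_DST,
  });
  const readback = device.createBuffer({
    size: input.byteLength,
    usage: g.GPUBufferUsage.MAP_READ | g.GPUBufferUsage.COPY_DST,
  });
  device.queue.writeBuffer(storage, 0, input);

  const pipeline = device.createComputePipeline({
    layout: "auto",
    compute: { module: device.createShaderModule({ code: doubleShader }), entryPoint: "main" },
  });
  const bindGroup = device.createBindGroup({
    layout: pipeline.getBindGroupLayout(0),
    entries: [{ binding: 0, resource: { buffer: storage } }],
  });

  // Record the compute pass, then copy results into a CPU-mappable buffer.
  const encoder = device.createCommandEncoder();
  const pass = encoder.beginComputePass();
  pass.setPipeline(pipeline);
  pass.setBindGroup(0, bindGroup);
  pass.dispatchWorkgroups(workgroupCount(input.length));
  pass.end();
  encoder.copyBufferToBuffer(storage, 0, readback, 0, input.byteLength);
  device.queue.submit([encoder.finish()]);

  await readback.mapAsync(g.GPUMapMode.READ);
  const result = new Float32Array(readback.getMappedRange().slice(0)); // copy before unmapping
  readback.unmap();
  return result;
}
```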

As a result, future applications on the Web will be able to perform ML/AI tasks on your device rather than depend on cloud-based inference.

As I noted in Web Renaissance Part 1: The Great Rebundling, LLMs are moving closer and closer to the user. Arc Search uses LLMs to provide faster time-to-knowledge, but it is still cloud-based generative AI. There’s a furious pace of development around open-source AI models, which means that the next step could be delivering models in-browser, allowing your own computer to do more work (which in turn ought to open up more agentic capabilities that incorporate your personalized data in a secure manner).

WebAssembly

WebAssembly (Wasm) is a technology for shipping compiled bytecode to your Web browser, rather than source code in an interpreted language such as JavaScript. WebAssembly can run at near-native speed for CPU-oriented tasks. Programs targeting WebAssembly can be written in many languages (C, C++, C#, Rust and others), provided someone has written a compiler that targets Wasm. It has been available in most browsers, including mobile browsers, for several years.

In principle, Wasm does for CPUs what WebGPU does for GPUs. Together, they mean that large and complicated programs can be shipped over the Web. Just as 3D engines need WebGPU support to bring graphically intensive games to the Web, they need WebAssembly support as well. Fortunately, Unity supports Wasm via Emscripten (a cross-compiler), and Wasm support for Unreal Engine is being developed within the community.
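To show what “shipping bytecode” means at the smallest scale, the sketch below instantiates a hand-assembled Wasm module that exports `add(a: i32, b: i32) -> i32` and calls it from TypeScript. The byte layout follows the WebAssembly binary format; `addModuleBytes` and `loadAdd` are illustrative names. In a real game the bytes would come from a compiler such as Emscripten and would typically be loaded with `WebAssembly.instantiateStreaming(fetch(url))`.

```typescript
// A complete Wasm module: one exported function, add(i32, i32) -> i32.
const addModuleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  // Type section: one function type (i32, i32) -> i32
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,
  // Function section: one function, using type 0
  0x03, 0x02, 0x01, 0x00,
  // Export section: export function 0 under the name "add"
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,
  // Code section: local.get 0, local.get 1, i32.add, end
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b,
]);

async function loadAdd(): Promise<(a: number, b: number) => number> {
  // The engine validates and compiles the bytecode to native code before it runs.
  const { instance } = await WebAssembly.instantiate(addModuleBytes);
  return instance.exports.add as (a: number, b: number) => number;
}

loadAdd().then((add) => console.log(add(2, 3))); // prints 5
```

Because the function works on 32-bit integers, `add(2147483647, 1)` wraps around to `-2147483648`, just as it would in compiled C.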

Progressive Web Applications

Ideally, software distributed through the Web ought to function more like an “app” — allowing access to native features, running in the background, accessing notification methods, and enabling offline functionality. This is precisely what Progressive Web Applications (PWAs) aspire to do: repackaging web pages into web applications that function more like the software you’re accustomed to.

As noted above, web software largely means mobile. For example, I’ve run an FRVR game as a Progressive Web App on my iPhone.

There are a number of advantages to implementing software as a PWA. One of the largest is that these applications exist as standalone Home Screen applications with their own icons, and they appear independent of the web browser as you switch between applications.
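Much of what makes a site install as a standalone Home Screen app is declared in a Web App Manifest. A minimal sketch, assuming a game served from the site root: the member names (`name`, `short_name`, `start_url`, `display`, `orientation`, `background_color`, `theme_color`, `icons`) come from the W3C Web Application Manifest spec, while `buildGameManifest`, the icon paths and the values are placeholders.

```typescript
interface ManifestIcon {
  src: string;
  sizes: string;
  type: string;
}

interface WebAppManifest {
  name: string;
  short_name: string;
  start_url: string;
  display: "fullscreen" | "standalone" | "minimal-ui" | "browser";
  orientation?: "any" | "landscape" | "portrait";
  background_color: string;
  theme_color: string;
  icons: ManifestIcon[];
}

function buildGameManifest(title: string): WebAppManifest {
  return {
    name: title,
    short_name: title.slice(0, 12), // rough heuristic: Home Screen labels get truncated
    start_url: "/?source=pwa",
    display: "standalone", // launch in its own window, without browser UI
    orientation: "landscape",
    background_color: "#000000",
    theme_color: "#000000",
    icons: [
      { src: "/icons/icon-192.png", sizes: "192x192", type: "image/png" },
      { src: "/icons/icon-512.png", sizes: "512x512", type: "image/png" },
    ],
  };
}

// Serve the output as /manifest.webmanifest and link it from the page with:
// <link rel="manifest" href="/manifest.webmanifest">
console.log(JSON.stringify(buildGameManifest("Example Game"), null, 2));
```

The `display: "standalone"` member is what asks the browser to run the app in its own window rather than a browser tab.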

PWAs also support local storage, push notifications and background execution. And because you’re operating within your own website, you’re free to monetize your application however you want, whether that means ads, Stripe or another card processor for purchases, or Web3; you won’t owe anything to Google or Apple.
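Offline caching, background work and push notifications all hang off a service worker. Below is a minimal sketch of the page-side setup, assuming a worker script at the hypothetical path `/sw.js` and a VAPID public key supplied by your push server. `navigator.serviceWorker.register()` and `pushManager.subscribe()` are real Web APIs; `setUpPwa` and its status strings are illustrative. On iOS, the Push API is only exposed to web apps that have been added to the Home Screen.

```typescript
type PwaStatus = "unsupported" | "registered" | "push-subscribed";

// Call from a user gesture (e.g. a button click): Safari requires one before subscribing to push.
async function setUpPwa(vapidPublicKey: Uint8Array | null): Promise<PwaStatus> {
  const nav = (globalThis as any).navigator;
  if (!nav || !nav.serviceWorker) {
    return "unsupported"; // no service workers: no offline mode or push
  }
  // The worker script (hypothetical "/sw.js") handles caching and incoming push messages.
  const registration = await nav.serviceWorker.register("/sw.js");
  // pushManager is only present where the Push API is exposed to this page.
  if (!vapidPublicKey || !registration.pushManager) {
    return "registered";
  }
  await registration.pushManager.subscribe({
    userVisibleOnly: true, // browsers require every push to show a visible notification
    applicationServerKey: vapidPublicKey,
  });
  return "push-subscribed";
}
```

The returned subscription (discarded here for brevity) would normally be sent to your own server, which is what lets a PWA notify players without going through an app store.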

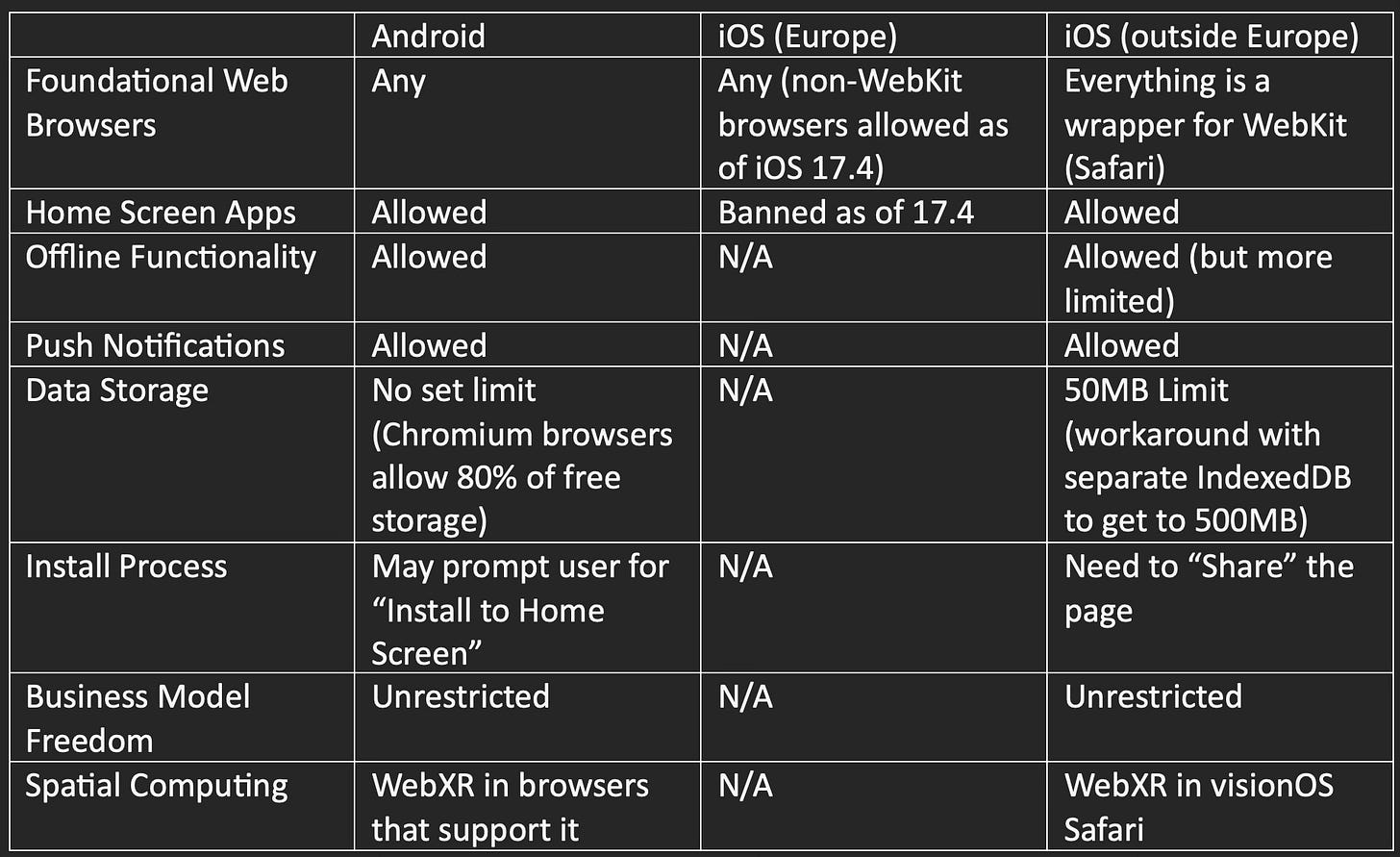

Historically, Apple has been quite a bit slower than Android to embrace PWA features. For example, push notifications for Home Screen web apps only arrived on iOS with version 16.4 in 2023. And whereas Android supports a “Click to Install” user interface prompt, iOS Safari does not; users have to add the app to the Home Screen manually from the Share menu.
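On Android, the install prompt is exposed to pages by Chromium-based browsers through a non-standard `beforeinstallprompt` event, which a site can stash and replay from its own “Install” button. A hedged sketch: the event, its `prompt()` method and `userChoice` promise are Chromium behavior, while `InstallPromptController` is a hypothetical helper. iOS Safari never fires the event, so there the page has to show manual instructions instead.

```typescript
type InstallOutcome = "accepted" | "dismissed" | "unavailable";

class InstallPromptController {
  private deferred: any = null; // the stashed beforeinstallprompt event, if any

  constructor(target: EventTarget) {
    target.addEventListener("beforeinstallprompt", (event: Event) => {
      event.preventDefault(); // suppress the browser's own install infobar
      this.deferred = event;
    });
  }

  get canPrompt(): boolean {
    return this.deferred !== null;
  }

  // Call from a user gesture, e.g. an "Install" button's click handler.
  async promptInstall(): Promise<InstallOutcome> {
    if (!this.deferred) {
      return "unavailable"; // e.g. iOS Safari: show "Share > Add to Home Screen" instructions
    }
    const event = this.deferred;
    this.deferred = null; // a prompt event can only be used once
    await event.prompt();
    const choice = await event.userChoice;
    return choice.outcome;
  }
}

// In a page: const installer = new InstallPromptController(window);
```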

Another big difference is that on Android, you can install any web browser you want. On iOS, you might have installed Chrome or Firefox, but you’re actually using a wrapper around WebKit, the engine that powers Safari. This recently changed in Europe due to the Digital Markets Act, under which Apple must now permit non-WebKit browsers. Apple has complied as of iOS 17.4, but at the cost of banning PWAs.

Hopefully this will change with time, and Europeans will be able to access PWAs again. And hopefully Apple won’t extend this ban to the rest of the world. PWAs are not allowed on visionOS anywhere; hopefully that will change too. That’s a lot of hope to go around… And some, like Tim Sweeney (CEO of Epic Games, the creator of Unreal Engine), have publicly speculated about Apple’s motives.

Here’s a summary of how mobile PWAs work across different devices:

Spatial Web Apps

The WebXR standard allows Web pages to display immersive 3D applications that can be experienced on a 2D screen or, ideally, on a virtual reality device. WebXR is supported on visionOS, somewhat half-heartedly: I’ve found that it freezes constantly. It works better on Meta Quest and other spatial devices that include a Chromium-based browser.

There is already work toward a WebXR-WebGPU binding, so the performance and visual quality of WebXR are likely to improve substantially. However, spatial computing involves a lot more than rendering: many spatial applications depend on what your cameras see (image recognition, gesture recognition, depth recognition, etc.). WebGPU might come to the rescue here as well, since the machine learning models that enable much of this could run on your device’s GPU, but these will often be large, complicated pieces of software that may not work as seamlessly as a native experience. Time will tell. Nevertheless, it’s a frontier for further innovation.
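Today, a WebXR page typically starts by asking which session modes the device supports and degrading gracefully. A minimal sketch: `navigator.xr.isSessionSupported()` and the `"immersive-vr"` and `"inline"` modes come from the WebXR Device API, `"immersive-ar"` from its AR Module, while `bestXrMode` and the preference order are our own illustrative choices. Actually starting an immersive session with `requestSession()` additionally requires a user gesture.

```typescript
type XrMode = "immersive-vr" | "immersive-ar" | "inline" | "none";

// Pick the richest session mode this browser/device offers.
async function bestXrMode(): Promise<XrMode> {
  const xr = (globalThis as any).navigator?.xr;
  if (!xr) {
    return "none"; // no WebXR at all: render to an ordinary 2D canvas
  }
  // Preference order is an illustrative choice: VR first, then AR passthrough.
  for (const mode of ["immersive-vr", "immersive-ar"] as const) {
    if (await xr.isSessionSupported(mode)) {
      return mode;
    }
  }
  return "inline"; // inline sessions show XR content embedded in the 2D page
}

bestXrMode().then((mode) => console.log(`WebXR mode: ${mode}`));
```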

Optimizing Game Load Time

But the Web introduces new problems: in particular, games have gotten huge. One of the largest, ARK: Survival Evolved, weighs in at 250 GB of storage. Baldur’s Gate 3, 2023’s Game of the Year, is 150 GB. Most developers are accustomed to shipping large sets of files that stay on your device.
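To put those sizes in perspective, here is the download time for a 150 GB install such as Baldur’s Gate 3, assuming (purely for illustration) decimal gigabytes and a sustained 100 Mbit/s connection:

```latex
t = \frac{150\ \text{GB} \times 8\ \text{bit/byte}}{100\ \text{Mbit/s}}
  = \frac{1.2 \times 10^{12}\ \text{bit}}{10^{8}\ \text{bit/s}}
  = 12{,}000\ \text{s} \approx 3.3\ \text{h}
```

Over three hours of waiting is acceptable for a purchased AAA title, but not for a game someone just tapped a link to try.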

With the Web, it’s important to get people playing quickly. Every second of waiting means they might get distracted and move onto something else—whereas with a AAA game you’ve purchased, you’ll be willing to wait to get updates and download all the content in advance.

Unity attempted to solve the problem with a technology called Addressables, but unfortunately it doesn’t scale well and few developers have found it to be useful in large games. Many have resorted to building their own alternatives.

Many mobile developers have learned the tricks of shipping a small binary and downloading content afterwards. Some have even learned how to stream content in the background as you continue to play. These skills are less about the inherent technology of the Web, and more about building games that are truly for the Web—3D engines and content management technologies need to improve to make it easy to build games this way.
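The “ship a small binary, stream the rest” pattern can be sketched as a load planner: sort assets by when the player needs them, put the essentials (plus whatever else fits a size budget) in the first download, and fetch the remainder in the background. This is a hypothetical sketch; `Asset`, `planLoad`, `streamDeferred` and the priority scheme are illustrative assumptions, not Unity Addressables or any other engine’s API.

```typescript
interface Asset {
  id: string;
  bytes: number;
  priority: number; // lower = needed sooner; 0 = required before play can start
}

interface LoadPlan {
  initial: Asset[];
  deferred: Asset[];
  initialBytes: number;
}

function planLoad(assets: Asset[], initialBudgetBytes: number): LoadPlan {
  const ordered = [...assets].sort((a, b) => a.priority - b.priority);
  const initial: Asset[] = [];
  const deferred: Asset[] = [];
  let initialBytes = 0;
  for (const asset of ordered) {
    const mandatory = asset.priority === 0;
    // Optional assets join the first download only while they fit the budget,
    // and never ahead of an earlier-priority asset that was already deferred.
    const fits = deferred.length === 0 && initialBytes + asset.bytes <= initialBudgetBytes;
    if (mandatory || fits) {
      initial.push(asset);
      initialBytes += asset.bytes;
    } else {
      deferred.push(asset);
    }
  }
  return { initial, deferred, initialBytes };
}

// Fetch deferred assets one at a time, in priority order, while the player is already playing.
// `fetchAsset` is a hypothetical loader supplied by the game (e.g. wrapping fetch + cache storage).
async function streamDeferred(plan: LoadPlan, fetchAsset: (id: string) => Promise<void>): Promise<void> {
  for (const asset of plan.deferred) {
    await fetchAsset(asset.id);
  }
}
```

A production system would also need caching, retries, and a way to pause briefly if the player reaches content that hasn’t finished downloading.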

The Web Renaissance

All of these technologies—WebGPU, WebAssembly, PWAs and WebXR—promise to enable web browsers to performantly and securely deliver applications that were previously the domain of operating systems. This is an exciting frontier where developers have the freedom to create more applications that reach more people while keeping more of the upside.

If you didn’t read the first part of this series, see Web Renaissance Part 1: The Great Rebundling, where I discuss how AI and large language models are in the process of disrupting the entire way you use web browsers and discover content.

Part 3 will deal with how the business of marketing, payment systems and software distribution is going to change as a result of the Rebundling and the technological changes I’ve covered. It’s coming soon.

[1] If you want a much deeper dive on the history of programmable shaders, what they can do and how they work, Introduction to Compute Shaders is a good write-up.

[2] As with anything in gaming, this is open to debate. There are cases where DX 11 is actually more performant than DX 12, although this is usually due to bugs or translation-layer issues. If you search for things like “Should I use DX12 or DX11 on my Steam Deck for Witcher 3” (the Steam Deck is really a Linux device, which makes it a special case: it runs Windows games through the Proton compatibility layer), you’ll see lots of commentary on this sort of thing. But generally speaking, when DX12 is used properly, and without excess translation layers like Proton, it is often the more performant of the two.

[3] Once again, actual results will vary for a huge number of reasons. One example is a comparison of Baldur’s Gate 3 performance between its Vulkan and DirectX 11 options (it doesn’t support DX 12). There are cases where the older, mid-level DX 11 can somewhat outperform Vulkan, but Vulkan is typically better on more recent hardware.

[4] That said, you could get a game to run pretty well with WebGL if your rendering needs were somewhat limited and the customer’s hardware was sufficient. When we developed Star Trek Timelines, we got it running on the Web with WebGL, and it performed adequately on good hardware. The most noteworthy user of WebGL is probably Google Maps, which went from an earlier version that streamed pre-rendered tiles to one that delivers much more fluid real-time map graphics.
